There’s only one way to grow your deep learning team effectively: by adding new people to it! (We were just as shocked as you are by this revelation!)

Filling your team can be done a couple ways: by recruitment, hiring freelancers, or outsourcing to consulting agencies. Finding talented people is hard enough already, so make sure your newly hired team members hit the ground running and don’t slow down the rest of the team. Depending on your team’s tools, this might however be easier said than done.

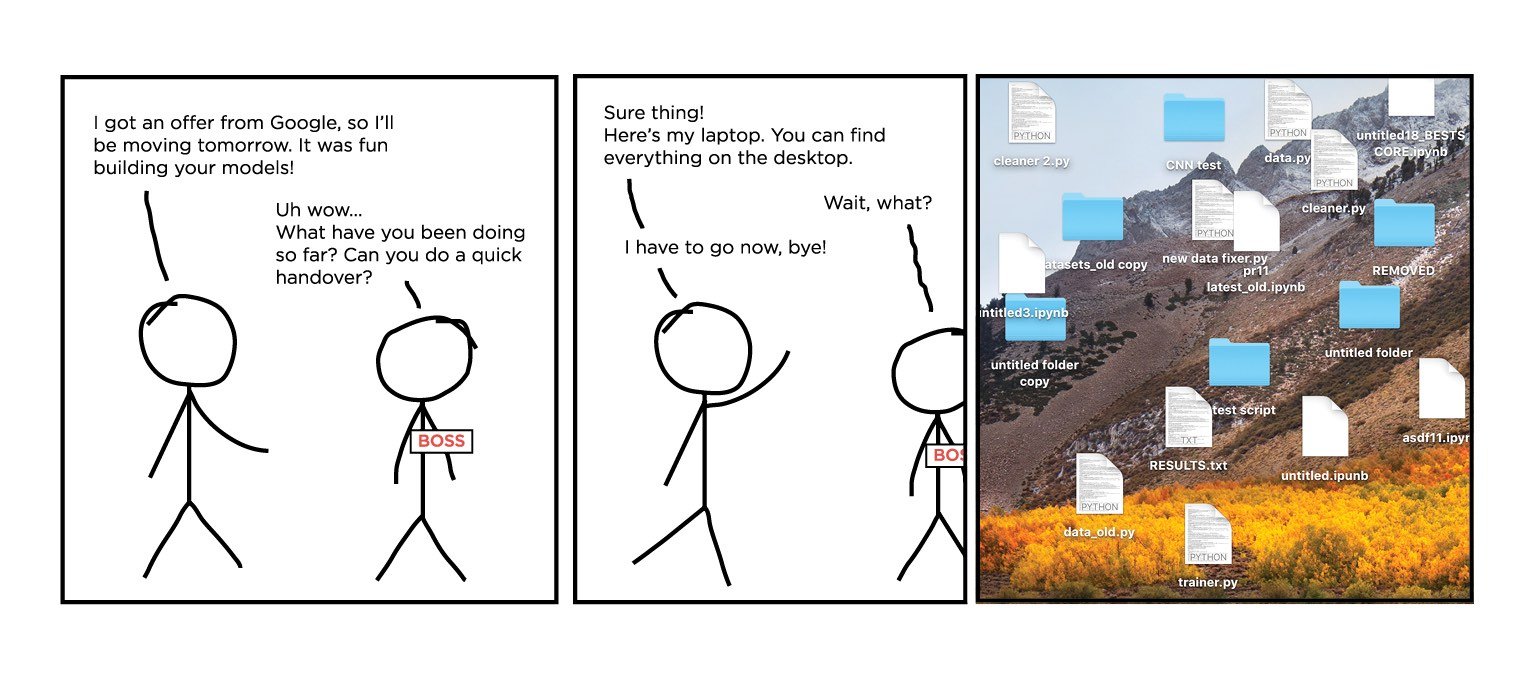

When onboarding new members goes wrong, it can simply be hell; in the worst cases, getting new people up to speed with existing projects, tools, and ways of working often takes several months! The problem is compounded even further if they’re expected to continue the work of a predecessor who’s already left the company.

None of these problems are new however; in fact, many different industries have invested a lot of time into shortening ramp-up times for new team members and mitigating the risks of not knowing what has been done when people leave. In software development, it’s mostly tackled through version control, documentation, and testing. Combined, these tools are a tremendous help in mitigating risks of knowledge gaps and streamlining onboarding.

The same tools and approaches will also serve you well with deep learning. Version control for experiments, results, and environments – while standardizing your workflow – are the main tools you can use to make your new data scientists more efficient and lighten the risks involved with people leaving your team.

Once in awhile, ask yourself “What’s my plan if <PERSON> quit today and someone else had to continue their work?” and take inventory with 5 essential questions:

- What models have they tried in the past? What worked? What didn't? And why?

- What parameter spaces have been explored? What spaces seem the most promising?

- What model is running in production? What dataset was used to train it? What code was used to train it? What metrics did we use to choose it?

- What environment is needed to run the code for training and inference? What version of Python, TensorFlow or PyTorch is required?

- What kind of hardware should the code be executed in? How much GPU memory is needed? How many nodes or GPUs are required?

If you find my questions aren’t giving you the answers you’re looking for, email me at eero@valohai.com and let's discuss how Valohai tackles these issues.