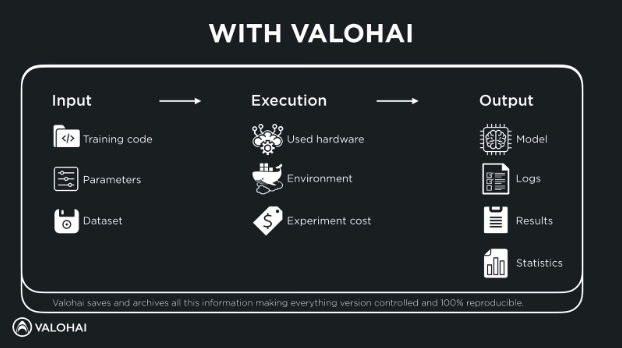

When meeting with teams that are working with machine learning today, there is one point above everything else that I try to teach. It is the importance of storing and versioning of machine learning experiments and especially how many things there actually are that need to be stored.

Most companies today are able to systematically store only the version of code, usually using a version control system such as Git or SVN. Some more advanced teams also have a systematic approach to storing and versioning the models but almost none are able to go beyond that. By systematic, I mean an approach where you are actually able to take a model in production and look at the version of code was used to come up with that model and that this is done for every single experiment, regardless if they are in production or not.

For each experiment you should have a systematic approach for storing the following:

Training code

The exact version of code used for running this experiment. This is usually the easiest, as it can be mostly solved by doing regular software development version control, with Git for instance.

Parameters

One of the important things in running and replicating an experiment is knowing the (hyper)parameters for that particular experiment. Storing parameters and being able to see their values for a particular model over time will also help gain insights and intuition on how the parameter space affects your model. This is exceptionally important when growing your team since new recruits are able to go and see how a particular model has been trained in the past.

Datasets

Versioning and storing datasets is one of the more difficult problems in the machine learning version control, especially when datasets grow in size and just storing full snapshots is no longer an option.

At the same time it is also one of the most important things to store for reproducibility and compliance purposes, especially in light of new and upcoming laws that will increase the requirement for backtracking the decision making done by machine learning models. Explainability becomes more and more difficult when dealing with deep learning. One of the few good ways to start understanding models is by looking at the training data. Good dataset versioning will help you when faced with privacy laws like GDPR or possible future legal queries about e.g. model bias. It will require some work but I recommend tackling this issue early on since it will become exponentially more difficult later on.

Used hardware

Storing hardware environments and statistics serves two purposes: Hardware optimization and reproducibility. Especially if you are using more powerful cloud hardware it is important to get easy visibility to hardware usage statistics. It is way too easy and common today to use large GPU instances and not utilize them to their full extent. It should be easy to see the GPU usage and memory usage of machines without having to manually run checks and monitoring. This will optimize usage of machines and help debug bottlenecks.

The difficulty of reproducibility should not increase one bit when experiments grow in scale and executions are done on multi node clusters. New team members should be able to train the same models on new sets of data with just looking at the configuration and running an execution. Especially deep learning with large data can easily require a setup of several multi-GPU machines to run and this should not bring any kind of overhead to actually running the code. Looking at the history can also help you choose the right cluster size for optimal training time for future runs.

Environment

Package management is difficult but completely mandatory to make code easy to run. We have seen great benefits in a container based approach and tying your code to a Docker image that can actually run it will greatly speed up collaboration around projects.

Experiment cost

The cost of experiments is important in assessing and budgeting machine learning development. Charging granularity in existing clouds using servers shared within a team does not give you useful insights to actual experiment amounts and cost per experiment (CPE). Worst case, you can have multiple teams working within the same cost structure. This makes it impossible to assess the usefulness of investments per project.

Model

Model versioning means a good way to store the outputs of your code. The model itself is usually small and relatively easy to store, but the difficult part is bringing the model together with all the other things listed in this post.

Logs

Training execution time logs are essential for debugging use cases but they can also provide important information on keeping track of your key metrics like accuracy or training speed and estimated time to completion.

Results

Storing a model without results usually does not make a lot of sense. It should be trivial to see the performance and key results of any training experiment that you have run.

To learn more about version control and reproducible tests in Machine Learning, watch a recording of the webinar on Version Control in Machine Learning .