One size doesn't fit all - How the use case affects ML system complexity

by Eikku Koponen | on February 24, 2022Algorithms have become faster, fancier, and more complex in the past couple of years. Still, they haven't gained as much complexity as the systems around algorithms. Especially those machine learning applications that one can honestly talk about in the same sentence with AI.

System complexity tends to compound. For example, complex systems need more orchestration, automation, and rules. But, as a data scientist, I feel I should be able to concentrate on the data and algorithms. In other words, focus on what I'm good at, and leave the rest to those who know more or to platforms that abstract away the complexity.

But the world of engineers is pretty new to us, and MLOps is only emerging. Thus, there's no good shared understanding of how it all works together beautifully.

I'm fond of good visuals summing up the whole concept in a neat understandable package, through real-life examples, not just theoretical stuff. And I think that especially those interested in the whole MLOps, but don't know where to start, need (simple) one badly. So where we go!

I have collected a few familiar examples of data science project flows or pipelines and try to go over the bits and pieces through them now.

What do you actually need? It depends. One size doesn't fit all.

There are as many data science pipelines as there are teams building them and problems being solved. Below we'll look at a few and give you our view on how we see them breakdown into components and MLOps organized around these components. They all contain data and training steps, but the rest can be between nothing and anything.

Another unifying aspect is that these systems need to run on something. For me, it's Valohai because it has all the capabilities that I'm looking for when developing, maintaining, and scaling your data science pipeline. Instead of taking on all the complexity myself, I can let the platform abstract much of it away and focus on the functional pieces of my pipeline.

You can check out one end-to-end pipeline example on Valohai here.

Case Example: Discover Weekly

The risk profile is based on a previous article: Risk Management in Machine Learning. Risk tends to be a key component that makes machine learning systems more complex.

The risk profile is based on a previous article: Risk Management in Machine Learning. Risk tends to be a key component that makes machine learning systems more complex.

One of the classic machine learning (sub)product is Spotify's Discovery Weekly playlist, which as its name says, updates weekly on Spotify. They recommend songs based on the tracks you listened to, but also vast amounts of metadata about the artists and songs.

In essence, they run the predictions in weekly batches based on the current best model resulting in an updated playlist for all users. On a high level, the flow is pretty simple, you gather data, wrangle it, train the recommendation model, and finally do a batch inference for all users. So while users expect an updated playlist every Monday, the solution doesn't have much inherent risk because, at worst, the playlist isn't as good as it could be and users will just switch to another playlist.

To simplify, a pipeline for this use-case could look something like this:

What creates complexity at Spotify is that it has teams of data scientists and other roles working on the product, maybe one with the metadata about the lyrics, the other with the primary recommendation model, and another on the submodel. So they need a common platform where it is possible to work modularly, scripts are possible to re-run without dependency errors, and the one responsible for the final model can track down and make sure the correct version goes into production. Most importantly, the list of recommended songs needs to be produced on a massive scale (180 million paid subscribers!) automatically weekly; thus, stability is required.

In addition to the stability, data scientists might be interested in experimenting on improvements and an easy way to deploy new models using MLOps platforms such as Valohai.

Case Example: Credit Risk

Credit risk is also one of the go-to examples in machine learning applications. Banks have successfully used statistical models to predict their customers' default probabilities for 10-15 years and adopted ML models when they emerged. However, ML model logic is significantly more complicated than a traditional (logistic) regression approach. Financial institutions face another layer of data, ethics, and explainability challenges.

Credit risk is also one of the go-to examples in machine learning applications. Banks have successfully used statistical models to predict their customers' default probabilities for 10-15 years and adopted ML models when they emerged. However, ML model logic is significantly more complicated than a traditional (logistic) regression approach. Financial institutions face another layer of data, ethics, and explainability challenges.

Again, the process flow is pretty simple: get data, train model, get predictions, but differently from Spotify's playlist, credit scores and model output can actually have a huge impact on individuals' financial futures. Even though the end-users aren't necessarily getting the credit risk score directly themselves, interpretability, bias evaluation and explainability are crucial parts of the pipeline.

Inputted data can have mistakes, and external forces can cause significant data drift – not to mention outliers. So you would add those additional checkpoints and steps on your pipeline to make sure that if and when things go wrong, you know why your model predicted what it predicted and promptly be able to correct the mistake.

The pipeline could look like the following:

One of the key things for a financial institution is traceability, especially when things go wrong. So I've considered data testing and monitoring as an essential step in the pipeline above. If that topic sounds interesting, you should check out my blog post on Valohai and WhyLabs.

On top of that, for these types of use cases, focusing on Data-Centric AI is preferable to tinkering with the latest and coolest models.

Case Example: Food Delivery

A notch more complex system is delivery estimates that you see on your food or parcel delivery apps. The algorithms are trained with offline data consisting of many sources and events (driver, restaurant, journey, traffic, etc.). Then, the inference is done online, listening to these events in real-time.

A notch more complex system is delivery estimates that you see on your food or parcel delivery apps. The algorithms are trained with offline data consisting of many sources and events (driver, restaurant, journey, traffic, etc.). Then, the inference is done online, listening to these events in real-time.

According to UberEngineering, one of the primary issues they try to optimize is when their business logic dispatches a delivery partner to pick up an order. So instead of just optimizing the whole route, they optimize on top of optimizing.

An ML system for food delivery could look something like this:

The more complex the system, the more you need infrastructure and platforms. In order to manage production pipelines, model endpoints, and a team of data scientists, an end-to-end MLOps platform starts becoming an absolute must-have.

Complex systems are often easy to draw but tricky to implement, and it becomes much more a product management topic. We've written an article on evaluating the feasibility of AI products.

Build your own Machine Learning products

Valohai is built from the ground up with ML systems in mind. So we've always imagined that teams will build products with Valohai, not just models. Our core principle is to abstract the infrastructure layer without limiting what you can build on Valohai or connect to Valohai.

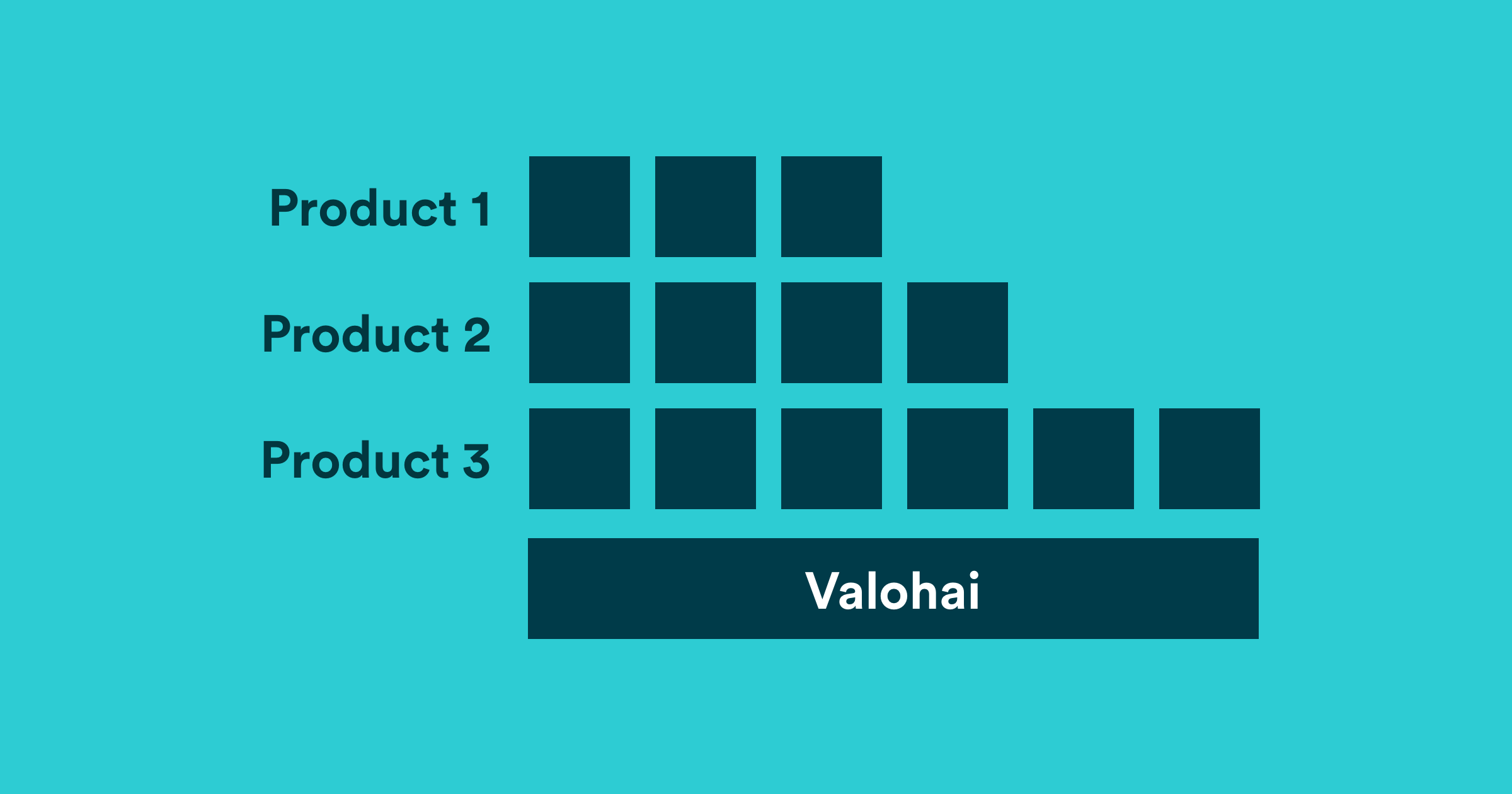

The three examples above show that systems you'll need to build will vary in complexity and focus but having a common foundation should make it easier to collaborate and scale.

The idea on Valohai is that you should be able to turn your Experiment easily into Steps and form a Pipeline of the Steps. In the case of a business doing all of the above examples, we could simply have three different pipelines serving different problems, built using various tools and libraries but still running all on Valohai.

On Valohai, you can run any number of Machine Learning pipelines, and each step in these can be unique (including language, libraries, instance types, etc.).

Here is how typically our users approach building a pipeline. The steps are typical to any data science project and a good starting point for a more production-ready world. If still in doubt, start by reading more on machine learning lifecycles here. Remember, you can build and run pipelines using any language and libraries and add existing reporting, experimenting, and monitoring tools on Valohai.

Start with experimenting

Usually, a data science project starts with experimenting with some data and possible models. Of course, some of these experiments you can do on your laptop, but saving you from the hassle at the next step, one can run notebooks or experiment scripts on Valohai. So, get your data from your bucket or upload the data to the platform, get your script, and you are good to go!

For example, if you prefer Jupyter Notebooks, we have the Jupyhai extension to run a Valohai execution from your local Jupyter notebook.

Split into steps

Once you've got an idea of what you are going to do, it's time to think modularly and split the tasks into steps, and try to embrace some engineering practices to your data science workflow.

Pssst! You should also check out our practical engineering tips series including Docker for data science.

Build a full-scale pipeline

Now that you have a beginning of the pipeline ready, you can easily add more steps to build a complete pipeline for an ML product. This includes adding steps that integrate other services to your pipeline, such as a data ingestion step that queries your data lake, e.g. Snowflake.

On Valohai, models can be served either as pipelines (for batch inference) or as endpoints (for real-time inference). For online inference, check out our documentation on the topic.

Happy pipeline building!