On March 16th, we organized a webinar to follow up on our MLOps eBook. Together with our co-authors, we wanted to tackle the goal we set for MLOps in the eBook: "The goal of MLOps is to reduce technical friction to get the model from an idea into production in the shortest possible time to market with as little risk as possible."

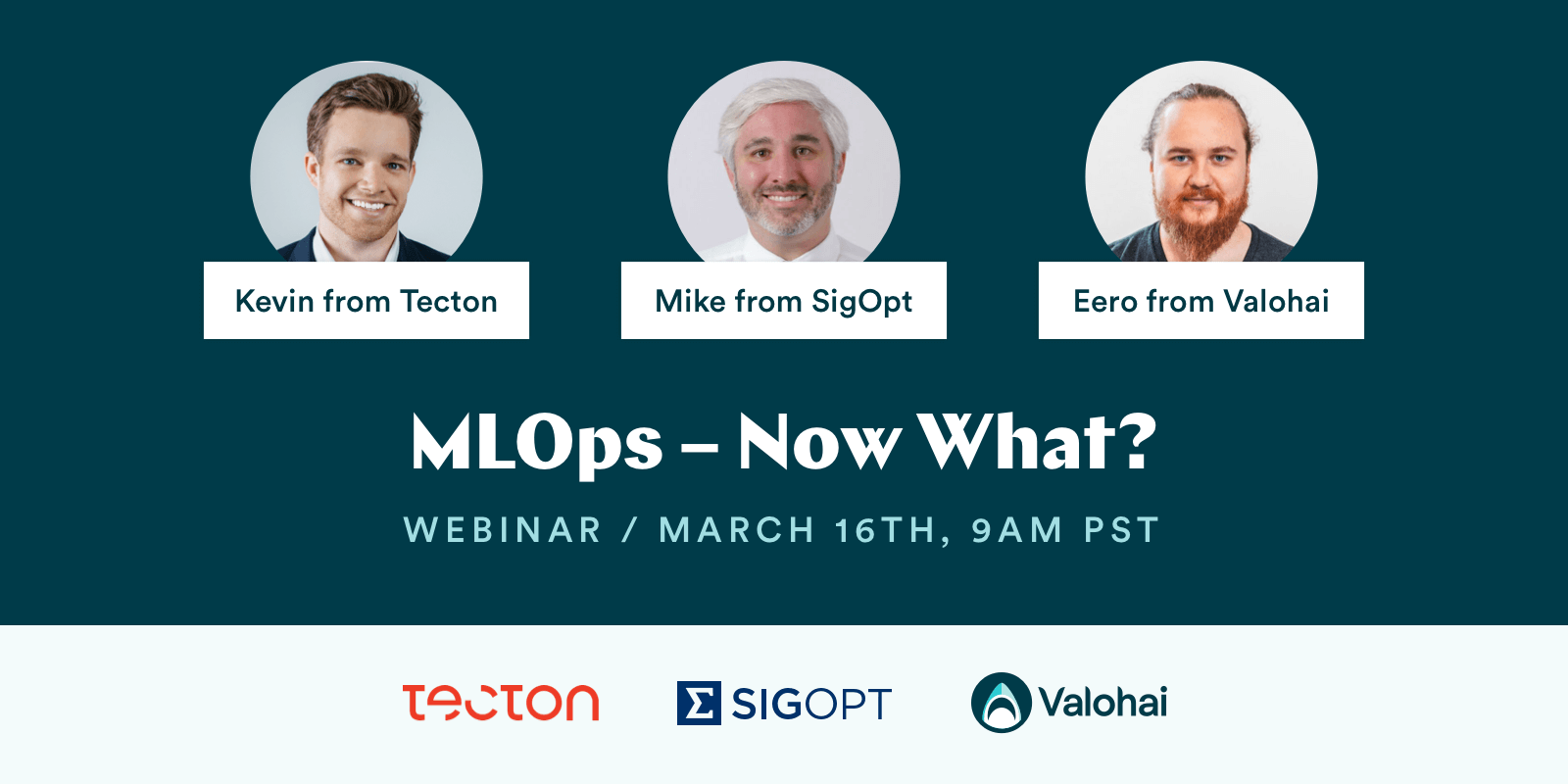

In the webinar, Kevin, Michael, and Eero discussed four different topics with that goal in mind: data, workflow, standardization, and finally, performance.

Thank you to everyone who joined us live, and if you didn't get the chance, check out the recording below. 👇

About the panel

Michael McCourt, SigOpt

Mike studies mathematical and statistical tools for interpolation and prediction. Prior to joining SigOpt, he spent time in the math and computer science division at Argonne National Laboratory and was a visiting assistant professor at the University of Colorado-Denver where he co-wrote a text on kernel-based approximation. Mike holds a Ph.D. and MS in Applied Mathematics from Cornell and a BS in Applied Mathematics from the Illinois Institute of Technology.

Kevin Stumpf, Tecton

Kevin co-founded Tecton, where he leads a world-class engineering team that is building a next-generation feature store for operational Machine Learning. Kevin and his co-founders built deep expertise in operational ML platforms while at Uber, where they created the Michelangelo platform that enabled Uber to scale from 0 to 1000's of ML-driven applications in just a few years. Prior to Uber, Kevin founded Dispatcher, with the vision to build the Uber for long-haul trucking. Kevin holds an MBA from Stanford University and a Bachelor's Degree in Computer and Management Sciences from the University of Hagen. Outside of work, Kevin is a passionate long-distance endurance athlete.

Eero Laaksonen, Valohai

Eero is the co-founder and CEO of Valohai. Valohai was founded in 2016 as one of the first companies to tackle the challenges of MLOps. He is passionate about accelerating machine learning adoption by providing ML teams the tools they need to scale their operations.

The discussion transcribed

[EERO, Valohai]: All right, hey. Welcome, everybody. We still have some people joining, but I guess we have most people here now. First, there will be a recording, so if you want to share this with your colleagues, you're going to get a recording, and you're able to share that. Secondly, welcome everybody.

A few, sort of, housekeeping things. If you have any questions at any point, there is a Q&A feature on Zoom. We're going to be using that for taking questions and feel free to put in questions at any time. We're going to be taking them either at the end of it or then during if it fits well with the conversation flow. Then in terms of timeline, this will take about an hour, maybe. If we have a lot of questions, maybe a little bit more but about an hour is what we're aiming for here. Okay, that's it for housekeeping.

So, let's start with introductions. So, we have three amazing people. I don't know if I can call myself amazing, maybe that's not okay, but at least we have two amazing people, and then one we're not so sure about. Hey, I'm going to let you guys introduce yourselves. So, Mike, you can go first. You're first on my screen.

[MIKE, SigOpt]: Hi. Absolutely. Do you want me to introduce the company to you?

[EERO, Valohai]: Yeah, go ahead. Introduce yourselves and then the company.

[MIKE, SigOpt]: Fantastic. Thank you, Eero. I count you as an amazing person. I've always enjoyed working with you, so I really want to appreciate you for hosting this session. I am Mike McCourt from SigOpt. Now, we're called SigOpt, an Intel company because although we were founded in 2014 by Scott Clark and Patrick Hayes, last November 2020, we were acquired by Intel, which has been a really exciting shift. We've been able to work on a lot of interesting and exciting projects both inside Intel and then continuing to expand outside of Intel.

In particular, what we've been focused on for our entire history has been on the model development process. Starting first with that hyperparameter optimization focus and saying to ourselves, "How do we make smart decisions around model hyperparameters?" Then pushing on to think some about this tracking process of model development, "How do you track how your model has developed over time?" And then, "How do you make sure you can orchestrate things somewhat effectively, especially in this parallel computation setting?"

We have a few core value propositions really at SigOpt. Part of it is this question of integration. How do you facilitate this interaction of hyperparameter optimization with all of the various different elements of model development? We'll talk more about that later. Sample efficiency really also is a key driving factor of SigOpt. How is it that we can be as sample efficient as possible, reduce the number of required trainings of your model, and still find you the best model that we can? And in particular, scale: with some of the decision-making process that we facilitate at SigOpt, how is it we can help you conduct this model development process at scale? I'd like to talk about all of those factors in a little bit in the context of this project we've worked on together, Eero. Thank you.

[EERO, Valohai]: Thanks, Mike. Yeah, and SigOpt is definitely an amazing company if you're looking to optimize models or build really anything at scale in terms of machine learning. Go ahead and check them out. Then let's jump into Kevin from Tecton. Go ahead.

[KEVIN, Tecton]: Hi. Nice to meet you, everyone. My name is Kevin Stumpf, and I'm the co-founder and CTO of Tecton. Tecton is a data platform for machine learning, or really a feature store, which helps solve the hardest and most common data problems around machine learning. Meaning, how do you turn raw data into features, which you then provide to your models which are running in production, or to your training pipelines which are running in your training production system, or to the Data Scientist who's working in a Jupyter Notebook or some other data science environment (that I'm sure we're going to talk about here as well), so that they can get access to historical features, train their models with them, and whatnot.

Before starting Tecton, my co-founders and I, we were all over at Uber, where we built Michelangelo, an end-to-end internal proprietary machine learning platform that all the Data Scientists, Data Engineers, ML Engineers, Software Engineers would use to end-to-end train an ML model, backtest it, evaluate it, and then eventually push it into production, and then use it at Uber's scale in production for really low latency and high-scale use cases.

[EERO, Valohai]: Thanks, Kevin, and also a plug from me on Tecton, an amazing company doing great things in the data management field. If you're stuck with "how do I manage my features in production and training," and so on, go ahead and check them out. Lastly, my name is Eero Laaksonen, and I'm the CEO of Valohai. We're an MLOps company. What we offer is an MLOps platform. We help companies that build custom machine learning models, whether it's like classical machine learning or deep learning.

We help with two major areas being the exploration of models when you're working in something like a Jupyter Notebooks environment. Then going from there into secondly productionizing your training pipelines and deployment of models. There we take care of two major issues, first of all, orchestration. So, pushing your scripts into the cloud, running them on multiple computers at the same time with the hyperparameters we get from SigOpt and the data we get from Tecton and running all of that in a single place, storing all of that information, and then pushing your models into production and versioning all of that. So, that's us in short, and I promise you this is where the plugging will end, and we'll focus more on the beef of the matter.

[MIKE, SigOpt]: Well, I could spend a minute and give a plug for Valohai. I had the great fortune of working with Valohai for this NeurIPS competition over the past year, and I will say I loved using the Valohai system. Incredibly impressive, incredibly effective at scaling, up to thousands of simultaneous computations happening at the same time spread out very effectively over machines. Thank you for working with us on that exciting project here.

[EERO, Valohai]: Thank you. It was a blast. It was really some interesting stuff. If you're interested in optimizing models, go ahead and check out the white papers that came from that. We worked together with Twitter and Facebook on a really interesting competition. But yeah, hey, let's get into the topic of the day.

Today, we're talking about the topic of MLOps around an e-book that we co-authored with this group. The main principle of the book is putting the MLOps into perspective and understanding what is the core of MLOps? The main statement of the book is really that the goal of MLOps is to reduce technical friction to get your models from an idea into production in the shortest possible time with as little risk as possible.

We're going to sort of take that principle and then start looking at four major topics today from that looking glass, really going and looking at first of all data. How do you look at data and managing data from that perspective of, again, going into production with as little risk and as little technical friction as possible and getting there as fast as possible? Then workflows, like what can you do around workflows? Then standardization, how does standardization affect this whole stack of machine learning? Then lastly, performance, how do you focus on getting enough performance in a world that keeps changing around you all the time?

Let's start with data. So, of course, one of the key problems is always getting your data, cleaning up your data, working with your data, but one of the key things is of course, allowing easy access and a process that allows you to work around data in an efficient fashion. I think that the one with most credits around this should be Kevin. So, Kevin, do you want to share some thoughts on data in production machine learning?

[KEVIN, Tecton]: Yeah, for sure, and maybe before I go directly into the data field, let's maybe zoom out and say, hey, what is an ML application, to begin with? Like what are the different artifacts you have to keep in mind as you try to get an ML application into production? To borrow an example that we had at Uber: imagine an Uber Eats or a DoorDash-type application that tries to make the prediction of, how long is it going to take until the food that you're about to order is going to show up at your doorstep?

Such an ML application consists really of three different applications or three different artifacts. One is the application, which is making the prediction and using the prediction. That would be your microservice that's feeding into the Uber Eats or DoorDash application behind the scenes.

Underneath that is the model, which is able to actually make a prediction, take some input parameters, take some input data in order to predict, how long is it going to take? Is it going to take 17 minutes, an hour, whatever it is? Depending on how busy the restaurant is right now, depending on the restaurant you order from, depending on the location you're at.

Then finally, the third piece, and that's the input into the model, is the data or also oftentimes referred to as really the features, which is transformed, highly condensed, information from the raw data that feeds into the model that makes the prediction which feeds into your application.

So, you have to keep all three artifacts, the application, the model, and the data, into account before you can develop and productionize an ML application. Data, we've seen, is one of the hardest things to get right because for really a multitude of reasons, and I'm going to try to not talk for five hours now about it, but you have to keep in mind like how do you, to begin with, find the right data as a Data Scientist? Where do you go? Do you have a data catalog somewhere in the organization you can go to? Does that show you all the relevant raw data that you may need? Then how do you get permissions to that data? Who do you have to talk to? Are there maybe some legal constraints around what you're allowed to use given the team you're on, given the problem that you want to solve?

Then let's say you got the permission to actually use the data. Now, what do you get? Do you get a CSV dump into your Jupyter Notebook, or do you get some one-off credentials to your Snowflake data warehouse, your Redshift, or maybe to some MySQL database? Now, you may have this raw data dump in your Jupyter environment. Now you turn this raw data into features by running some transformations, which could be just data cleaning operations where you throw out some garbage rows, or maybe you're running some aggregations in order to, say, produce information like what's the 30-minute trailing order count in a given restaurant? You may do all kinds of crazy things in order to make it easier for the model to pick up and identify signals that are related and correlated with what you end up trying to predict, which could be the preparation time for an order.
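As an illustration of the kind of aggregation mentioned here (a minimal sketch, not code shown in the webinar), a 30-minute trailing order count for one restaurant could be computed as below. The function name `trailing_order_count` and the half-open window convention are assumptions made for the example.

```python
from bisect import bisect_right
from datetime import datetime, timedelta


def trailing_order_count(order_times, as_of, window=timedelta(minutes=30)):
    """Count orders placed in the window (as_of - window, as_of].

    order_times must be sorted in ascending order. Orders after as_of are
    ignored, so the feature never peeks into the future.
    """
    first_inside = bisect_right(order_times, as_of - window)
    first_after = bisect_right(order_times, as_of)
    return first_after - first_inside


# Hypothetical order timestamps for a single restaurant.
orders = [
    datetime(2021, 3, 16, 12, 0),
    datetime(2021, 3, 16, 12, 10),
    datetime(2021, 3, 16, 12, 25),
    datetime(2021, 3, 16, 12, 40),
]
count_now = trailing_order_count(orders, datetime(2021, 3, 16, 12, 41))
```

In a notebook this runs in microseconds over a small list; the production question raised in the next paragraphs is how to get the same value at request time without querying a warehouse.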

Now, let's say you've got these features that you're happy with. Now you've got a model in your Jupyter environment which you're happy with, and let's say with Valohai or some other tools, you find a way to actually get this model into production too. Now the question is how do I get features to my model that is running in production, that is running behind some microservice endpoint that needs to make predictions at really, really high-scale and really low latency? Is your microservice behind the scenes now going to ping your Snowflake data warehouse or your Redshift data warehouse in production? Is it going to run these aggregations on the fly?

Most likely, you cannot do this because these operations are going to take way too long, way longer than the time that you have available to make a prediction. If you want to make a prediction within 50 milliseconds, you're not going to have the luxury of going to your data warehouse and executing a query on the fly, which may take minutes or hours to complete. So, you have to keep all of these different problems in mind.

How do you get the raw data into your development environment? How do you turn this into features in a development environment? How do you make these features available in your production environment? Then how do you make those features even consistently available in production so that you don't introduce what's oftentimes referred to as training-serving skew, where you calculate the features one way in your development environment and then a completely different way in your production environment? If you do this, then your model may look fantastic in your development environment where you run backtests against historical data, but then you throw your model into production and calculate the feature values a different way there, and suddenly, your model just doesn't perform well anymore because you've introduced the training-serving skew.
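To make training-serving skew concrete, here is a small hedged sketch (an illustration, not code from the webinar). The feature names, the `PEAK_HOURS` definition, and all function names are hypothetical. The idea is that training and serving both call one shared feature function, while a separately reimplemented serving path can silently drift.

```python
PEAK_HOURS = {12, 13, 18, 19, 20}  # hypothetical definition of "busy" hours


def prep_time_features(order):
    """Single source of truth for turning a raw order into feature values."""
    return {
        "item_count": len(order["items"]),
        "is_peak_hour": int(order["hour"] in PEAK_HOURS),
    }


def build_training_rows(historical_orders):
    """Training path: reuses the shared feature function."""
    return [prep_time_features(o) for o in historical_orders]


def features_for_request(order):
    """Serving path: reuses the same shared feature function."""
    return prep_time_features(order)


def skewed_features_for_request(order):
    """Anti-pattern: a serving-side rewrite whose peak-hour rule has drifted."""
    return {
        "item_count": len(order["items"]),
        "is_peak_hour": int(17 <= order["hour"] <= 20),  # lunch peak is missing
    }


order = {"items": ["burger", "fries"], "hour": 12}
consistent = build_training_rows([order])[0] == features_for_request(order)
skewed = skewed_features_for_request(order) != features_for_request(order)
```

The model trained on `is_peak_hour = 1` for a noon order would see `0` for the same order in production under the skewed path, which is the failure mode described above.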

These are all the types of problems that Data Scientists today face as they try to productionize a model, and what you most commonly find for companies that don't use a feature store here is that you'd have a Data Scientist who partners up with a Data Engineer or a team of Data Engineers who is actually able to productionize this transformation code, which turns raw data into features and makes the data available in production. Now, of course, you have handoffs involved here where Data Engineers or other teams now have to pick up work from you, prioritize it on their own, put it on their agenda, and now suddenly, it's going to take forever until your model, and your data is in production up and running.

[EERO, Valohai]: Really good points, and I think this sort of rings true with what's in the book. One of the foundations of the book was the research that we did, sort of a large questionnaire on about 300 companies on their state of machine learning. There we sort of saw this transition and maturity level and different boxes of companies on what they were focusing on next. You could quite clearly see that the companies that didn't have models in production were really thinking in terms of these blobs of data, whereas companies that were already further down in production were thinking more in these dynamic terms of data flowing through your pipeline, both for retraining of models and for productionization. Then trying to figure out how to utilize infrastructure to tackle that discrepancy between my training environment and training data, which are completely different from my actual production environment. All right, cool. Hey, how about Michael? What thoughts do you have on data?

[MIKE, SigOpt]: You know SigOpt lives in a very interesting position. It's been designed intentionally so that users do not have to share any data with us, which has its problems in the sense that we can't leverage knowledge necessarily about the data to help accelerate this model development process. But it has its benefits because now customers who are very, very, very secretive, very private, very concerned about their data security can still work with us and still get value out of SigOpt. As a result, we have, I think, a unique perspective in this concept of not just data but also what advice we can give about data.

Yeah, I definitely echo the comments from Kevin about the complexity and maturity of data pipelines as companies get deeper into the modeling and model development process. I think that I'd probably supplement that by saying that this question of your train versus production skew or some sort of bias that's built into the model process is something that is always going to be potentially present. Realistically there's no way to perfectly deal with that, and the reason is because, at the end of the day, when you build a model, you build it on things you know, but at the end of the day, you want to use your model on things you don't know. You want to use it on the future. You want to use it on data you have not seen. You want to use it to make predictions about circumstances which have not yet occurred.

So, I think that one of the key elements here is recognizing that that is going to be an issue, that that is going to be a problem, and using that to guide you in your process of retraining, or accounting for how much certainty you're willing to put behind your models. I think that that varies from industry to industry. I think that varies from company to company. So, I don't think that there's an easy or obvious answer to that particular situation, but I do think it is something that companies need to be aware of.

What I often recommend is that customers, before they start worrying about trying to build the fanciest, most complicated model ever, start with something simple. Start with the absolute simplest model and watch even that model drift over time or watch inconsistencies with that model over time and ask yourself, okay, now how am I going to account for that in my data? How am I going to account for that in my training test validation split? How am I going to account for that in the development of my backtesting or just in my desire to retrain? So, definitely keep it simple at the start. Watch that. That'll be guidance for other things.

I also want to expand the term data slightly here to include this topic of what might be called metadata, and in particular, this is maybe information about your model development process or information about model building decisions or how even your actual data was structured which isn't itself built into the data but is a fundamental element of the data pushing into the model, the larger model development process, how this model is interpreted. Things like how metrics are being computed, how they're even defined. Things as far as how this model was sort of structured or motivated or who built it because obviously---and we're going to talk about this in a little bit---people changing companies. So, this sort of metadata, a company creating its own data about the model development process I think can be very, very useful in helping companies with this issue of shift over time or this issue of having to look back six months and when this was created, why was it created in a specific way?

I also think another issue about data can be this issue of data augmentation and this question of how does data augmentation get dealt with at a variety of different levels? But I think that's something we can talk about in a different context in a little bit. So, thank you, Eero.

[EERO, Valohai]: Sure, yeah. For sure and continuing on that point of yours about like metadata and all of the other data that is generated during the process and utilization of that, not particularly for actual machine learning but to achieve the goal that the MLOps is trying to achieve.

[MIKE, SigOpt]: The split there between just machine learning versus the model development, this bigger concept here.

[EERO, Valohai]: Yeah, yeah. For sure. So, like stuff like understanding or the Docker image that was used for a particular training or other output artifacts that might be used, whether it's test reports, whether it's error reports, logs on pipelines that crash---all of that stuff. Of course, getting quite far from the actual training data, but also data that is related with the process. From our point of view, that's like being one of the core value propositions of what we sort of talk about a lot. A lot of the time, we see that a lot of companies do struggle with, like, how do we manage all of this?

All right. Hey. I have a few questions here. First of all, Eduardo from Mexico is asking, "Yeah, you already mentioned this MLOps as something to reduce friction then how about technical debt between research and production? Is it possible to consider a talk of this term when talking about MLOps?"

Well, I personally think that, yeah, technical debt is one way to look at the technical friction that is involved in getting into production faster and with less risk. I think technical debt inherently means risk and slowness of process when things escalate. So, I think that it's sort of in there. I think that it's a very core concept in machine learning and MLOps. The whole process that we're going through as MLOps is starting to emerge, I think, started in many ways from the Google paper, which I think was titled something like technical debt in machine learning being the high risk or high---

[MIKE, SigOpt]: High-interest rate credit card?

[EERO, Valohai]: Yeah, that was the topic. A really great paper from the Google research team that sort of started this process. So yeah, I definitely think that it should be a part of this. I see some people raised their hands for asking questions, but can you use the Q&A feature to write your questions so we can pick them up when they come? All right and then how---

[KEVIN, Tecton]: Actually, I want to be really quick here. I think the technical debt, and how to reduce it between research and production, is an interesting question. I think there's much more we can dive into here when we talk about standardization in one of the later chapters of this talk because, at the end of the day, technical debt is oftentimes introduced when you don't have a standardized process.

What we've seen at Uber and with our customers here at Tecton is that oftentimes if you don't have standardized processes, then people build their models, build their feature pipelines, build the data pipelines in one-off, bespoke ways that afterwards are extremely hard to manage because they just do it one way that maybe just one person understands. Then this person leaves the company, and afterwards nobody understands it anymore. There's not a consistent way to solve the same problem over and over again, and that's where standardization comes in, and that's where platforms come in that help you actually automate away a lot of these problems. The Tecton feature store helps you reduce or minimize the technical debt when it comes to solving the data problems around machine learning, and MLOps platforms allow you to reduce the technical debt that you would typically introduce when you try to productionize an ML model.

[EERO, Valohai]: Yeah, exactly, exactly. Then I have another question on what do you guys think? I have seen this very different two types of processes when we talk about structured and unstructured data and how that flows through a sort of machine learning process. I kind of wanted to hear your thoughts on like structured meaning time series or whatever you can put into sort of a database and then unstructured being stuff like images, audio, NLP. We sort of see this as very different looking processes from like feature generation point of view, feature management point of view, and very different types of tools being adopted in those fields. How do you feel about that?

[KEVIN, Tecton]: Yeah, and then there is also the semi-structured data in between that is not necessarily just unstructured or fully structured, and yeah, when you talk about data management here and looking at very different tools, it really depends on which parts of the data management lifecycle you look at. You could try to simplify it by looking at extract, transform, and load. Of course, with the transform piece of it, yes, you would typically find fairly different tools that transform your structured data versus your unstructured data or your semi-structured data. There are still common systems that we oftentimes see, though, to extract, say, the data to begin with, whether it's structured, semi-structured, or unstructured. For instance, you could use Spark for that. Spark is pretty good at extracting unstructured as well as fully structured data from your data lake or other data sources.

The transform piece, you've got way more variants here, and then the load piece is interesting too. Like, where do you store, where do you manage this type of data? You would typically see that you've got your data lake, which is where you can actually throw everything at, whether it's fully structured data or fully unstructured data. Data warehouses, as of today, are more suitable to store structured or semi-structured data. I know there's much more of a push now to actually bring much more unstructured data in, so it's going to be interesting to see a couple years from now to what extent we would still see this clear divide of what types of data do you manage in a data lake versus a data warehouse. But as of today, that's how I see the difference between those two or really three categories.

[MIKE, SigOpt]: I would supplement that by saying that, from my perspective, at least when I think about how customers are approaching this, I do see a pretty strong divide between the types of SigOpt customers who are dealing with time-series data versus the types of customers who are dealing with, let's say, image data. Obviously, as I mentioned, customers aren't giving us their data. So, I don't know exactly what they're doing, but in discussions with them, it does feel that when people are working with time-series data, they often seem to be building their own strategies much more frequently than when people are working with, let's say, image data, where perhaps there's this wide swath of literature talking about how all of these different tools are already the best thing that you can be creating. Now, some of this may be, let's say, selection bias, and it just happens to be that the customers who like to use SigOpt with their time series tend to want to build their own tools or their own methodologies. But that is a split that I've seen, at least again amongst SigOpt customers, and I'm not sure if that trend carries out into larger segments of the ML community.

[EERO, Valohai]: I certainly do see that, but I could go on for a long time about that particular topic seeing how different everything from like augmentation and so on looks in these types of environments. But I want to move on to the next topic.

Next, we're going to be talking about workflows, and just to prime that, for me, it really looks like machine learning in production environments is sort of a superset of the talent you need in building a full-stack application. So, it's like yeah, we're building a production environment and production solutions, but on top of that, we have this weird new concept of data that needs to be up to a certain level of quality. The computations are much larger and much more complicated. All of a sudden, you need GPU clusters, or you need huge data warehouses and not just that like a simple Kubernetes cluster where you can shoot your Python application or whatever and you know it works. It's not that simple anymore.

Obviously, the technical workflows that are required to manage and build these types of systems are going to be much more complicated. For instance, I personally see it as a big hurdle for Data Scientists to be able to catch this huge amount of talent that they need to sort of deliver and to be able to build systems. I think one of the main points of MLOps is really to take that required full stack capability and abstract it away, and let you focus on a smaller subset that can be actually managed by people outside of that, like .001% unicorns that can do everything in the world that we always read about, but don't really exist in the real world.

How do you guys see sort of workflows around machine learning and production? First, let's go with Kevin around data. How do you see that process happening in MLOps and data?

[KEVIN, Tecton]: Yeah, definitely, and to me, there are almost like two layers of workflows here. There's the workflow that the Data Scientist, the person who's responsible for training the model, coming up with features, and deploying it, is directly seeing. Then there is the underlying technical workflow. Ideally, you want to have a system in between that takes the user-facing workflow and translates it down to the much more intricate, technical workflow under the hood. If you don't have that, then the user, the Data Scientist, will again have to work with a Data Engineer to spin up data pipelines, which read the raw data, turn it into features, store it somewhere, and then serve it in production.

The way we solve this with Tecton, or the way we solved it beforehand at Uber, is that the workflow that the user directly interacts with is, they would be in their modeling environment. They would connect to their raw data sources. Then they would just define their feature transformation, whether it's SQL code, or whether it's some PySpark code, or it's just some Python code by itself that describes how do I want to turn my raw data coming off of a batch source or off of a stream like a Kafka source or a Kinesis source? How do I want to turn this into feature values? Then afterward, they can train their model against it.

Now, once they've trained their model against it, they want to now be able to productionize this data. The way this works with a feature store is that you would typically commit this feature definition, which has a name and which contains the transformation code, to your feature store, which then behind the scenes starts spinning up these far more intricate technical workflows, where you would have an orchestration system that is aware of what types of data pipelines should be running at the moment. Do I need to spin up a Flink pipeline, or do I need to spin up a Spark pipeline, which at any given moment connects to the raw data source and turns it into feature values?

Now, what do you need to do with these feature values? Where do I need to put them? Well, most likely, if you have a model that's running in production at high-scale and low latency, you need to store some of these feature values in a key-value store, some data store that is able to respond very, very quickly within just a couple of milliseconds. Okay. That's great.

Your feature store solved that problem and productionized the feature values, so you can use them in production. But then you also need the ability to fetch these feature values later on to train your model, because as a Data Scientist you want to take a look at what the world looked like at any given point of time in the past as you train your model. These data pipelines, which your feature store manages, would also take their output and store it in a data store that you can go to later on in order to look at the historical feature values that you then again use to train your model, while you, of course, get the guarantee that you're not introducing this train-serve skew that we talked a little bit about earlier.
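As a rough illustration of the pattern Kevin describes (one feature definition feeding both a low-latency store for serving and a historical store for training), here is a minimal Python sketch. The `ToyFeatureStore` class, its method names, and the `trip_distance_km` feature are all hypothetical and are not Tecton's or Michelangelo's API. The sketch assumes events arrive in timestamp order.

```python
from bisect import bisect_right
from collections import defaultdict


class ToyFeatureStore:
    """Hypothetical in-memory stand-in for a feature store (not a real product API)."""

    def __init__(self):
        self.definitions = {}             # feature name -> transformation function
        self.online = {}                  # (feature, entity) -> latest value, for serving
        self.offline = defaultdict(list)  # (feature, entity) -> [(timestamp, value), ...]

    def register(self, name, transform):
        """Commit a named feature definition containing the transformation code."""
        self.definitions[name] = transform

    def ingest(self, entity_id, timestamp, raw_event):
        """Run every registered transformation once and write to both stores.

        Assumes events for an entity arrive in increasing timestamp order.
        """
        for name, transform in self.definitions.items():
            value = transform(raw_event)
            self.online[(name, entity_id)] = value
            self.offline[(name, entity_id)].append((timestamp, value))

    def get_online(self, name, entity_id):
        """Serving path: return the latest value."""
        return self.online[(name, entity_id)]

    def get_as_of(self, name, entity_id, timestamp):
        """Training path: return the value that was current at `timestamp`."""
        history = self.offline[(name, entity_id)]
        idx = bisect_right([t for t, _ in history], timestamp)
        return history[idx - 1][1] if idx else None


store = ToyFeatureStore()
store.register("trip_distance_km", lambda event: event["meters"] / 1000)
store.ingest("driver_1", 10, {"meters": 2500})
store.ingest("driver_1", 20, {"meters": 4000})
latest = store.get_online("trip_distance_km", "driver_1")       # value served in production
historical = store.get_as_of("trip_distance_km", "driver_1", 15)  # value as of time 15
```

Because the same transformation writes to both stores, the value a model sees at training time is computed by the same code as the value it sees when serving, which is the guarantee against train-serve skew mentioned above.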

[EERO, Valohai]: Totally makes sense, and it's the same thing from our point of view for training workflows. Again, just like when you want to submit a data transformation, you don't want to manually go ahead and start those Kafka runs or Spark jobs and spin up clusters and so on. The same thing with us is when you want to run a notebook on a GPU cluster or GPU machine. You don't want to manually log into AWS, spin up a machine, deploy a Docker image, then log in there and start running, and manually shut everything down and store your logs in between, doing all of that DevOps work or manual work in between just to get that notebook running. Let alone it being a production pipeline that needs to be run every ten minutes on a large scale. So, definitely a lot of touchpoints there between data and training. How about Mike? Any thoughts?

[MIKE, SigOpt]: My thoughts about workflow are that speed matters, consistency matters, and consistency is sort of born of maybe communication. It's born of documentation. Kevin just mentioned this question of, "What did the world look like six months ago?" I'm using the feature as it exists now, but did it look different when somebody trained this model previously?

So, I think that there are important elements both in terms of going quickly and pushing things faster, and in terms of consistency and communicating things effectively. Realistically, maybe that is in some ways one of the causes of this tech debt concept: when you let someone work on their own in their own little setting, all by themselves, they may be able to work very fast, but then pushing that into production in a consistent, solid fashion and communicating the results of what happened is more complicated. It is maybe then less consistent, or that is the part that then becomes slow.

I think that one key element here as far as having an effective model development workflow is this question of collaboration. How is it that you are documenting things that are going on? How is it that you are documenting the shift in things over time and are doing so in such a way that people in various different aspects of your work can speak about that? I know we're going to talk more about this later, but I'm bringing it up now because I think that this does affect workflow. I think that in this context then it can affect people's workflows who, in their mind, they know what they like. They know how they like to work.

So, the way you like to push things forward, the way you like to dev things, your workflow can be very much a personal experience. But it can cause friction with the larger workflow of trying to push things into production. So, in that context, I think it is pertinent in this topic.

Parallelization and just this question of developing multiple models simultaneously or more likely, within the development process, parallelizing different aspects of this workflow, different aspects of feature development, or different aspects of hyperparameter decision making. I think that parallelization is a key element in making sure things are going as quickly as possible while still keeping things documented. There's obviously this sort of trade-off mathematically as far as going to a higher parallelism as things are going on somehow. Things are in flight with less information than you'd like them to be. But, at the end of the day, I think that's one of the best ways to speed things up without losing this ability to communicate and document things.

Really, I'm just going to mention one of our customers, a large consulting firm, that talked to us about really a 30% productivity gain. They claim they saw the productivity gain by being able to cut down from, maybe, multiple days to just maybe 20 hours' worth of time in this model development process, through coming up with a better workflow: a workflow where things are being done sample-efficiently with parallelization, and where things are being effectively documented so that it's easier to look things up and push things forward. So, I will definitely say that we've had customers coming to us for whom a good workflow has given tangible benefits that they've been able to document.

[KEVIN, Tecton]: Yeah. I think I want to double down on this really quick. I think the importance of speed that Michael brought up is super, super important, because we've actually seen this at Uber beforehand, before we built Michelangelo, the ML platform, where it would actually take six months or 12 months to get an ML model into production. We see the same thing with a lot of our customers before we engage with them, and it's not just that you suddenly are twice as fast, but it's several orders of magnitude faster. That is what you can accomplish by providing a great workflow to the customer, a great standardized workflow, which we're going to talk about in a second, which then allows you to automate a ton of stuff. That gives you this multiple orders of magnitude of a speedup, which at the end of the day allows the Data Scientists to really rapidly iterate on their ideas, put them out into production, and test them. You really need to do that as a Data Scientist, where you're experimenting by the definition of your job and you don't really know ahead of time whether your model is going to actually perform well in production. If you always have to wait six months or even a couple of days, it's going to drastically slow down your productivity. You want to do whatever you can to put great workflows in front of the user, standardize them, automate them, so you can shrink it as much as possible down to basically the speed and the efficiency that we're now used to as Software Engineers, where we can write a line of code and get it into production literally within minutes.

[EERO, Valohai]: Yeah, and I think that sort of contains the whole core of what's happening in this MLOps space. I think it's just that same development that we saw in software development in the last maybe 20 to 30 years. A hero coder, this one person who can move fast alone, may get stuff done in a relatively short period of time, but when the complexity requires you to have more than one person working on it, it all falls apart when you don't have standardization.

Then, of course, on the other hand, we now think of these old ways of working as very inefficient, where you have to wait for a long time for any code to be tried out and so on. I see customers that have training times of three weeks, two weeks, something like this, just long training times. They think it's fine, and then you sort of put it into an Excel sheet and look at the return on investment, and you realize that, okay, maybe it's not a good idea.

Yeah, and that brings me to my next question. Do you have any awesome like dirty stories from the fields? I would love to hear a few like really bad workflows. We've been talking about good workflows, but any real-world examples on stuff that you've seen in the wild?

[MIKE, SigOpt]: I'll give one. This is a little dirty. I mean, at the end of the day, maybe it did something for them, but in particular, speaking from this hyperparameter optimization perspective of making these decisions: we actually did have one prospect at the time, who converted into a customer eventually, who was choosing every parameter independently of every other parameter.

This customer would choose ten different values for learning rate and pick whichever one was the best. Then they would fix that, go to momentum, pick ten different values for that, and take whichever one was the best. Then they would go to the dropout number and pick whichever one was best, and then bounce between those. I think there was one other parameter.

They kept doing this, and you know what? At the end of the day, it was working for them. They were happy with that, partly because they didn't know they could be doing better. But then they started talking to us, and they realized, oh, actually these things have some sort of joint interaction, and if you do that strategy, you'll get something. I mean, it's not going to be the end of the world, but you could do a lot better than that.

Really, it was an important realization for me because I had just assumed, oh, it's obvious that if you think about all these parameters simultaneously with each other, things will be a lot better. It's not obvious if you do not communicate that to people. And for people out there watching, if you're not aware of this, there is a lot of complexity in this process of choosing intelligent parameters, and especially choosing intelligent parameters maybe from different parts of your workflow.

You can do all these things independently, and you will get something, and it may be fine, but it may not be fine. If you work under the assumption during your development process that things are fine when they are actually not fine, because they're being executed in the wrong way, you are going to hurt yourself. You are preventing yourself from seeing the maximum return from your model development process.
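To make the interaction effect Mike describes concrete, here is a small Python sketch comparing one-parameter-at-a-time tuning with a joint search. Everything in it is an assumption for illustration: `toy_score` is a made-up function with an interaction term standing in for a validation score, the two parameters are abstract values on a 0-to-1 grid, and the joint search is a plain exhaustive grid rather than the kind of optimizer SigOpt provides.

```python
from itertools import product

GRID = [0.0, 0.25, 0.5, 0.75, 1.0]


def toy_score(a, b):
    """Made-up score with an interaction term; the best value is 0 at (1, 1)."""
    return -(a - b) ** 2 - 0.1 * ((a - 1) ** 2 + (b - 1) ** 2)


def one_at_a_time(start_b=0.0, passes=3):
    """Tune a with b fixed, then b with a fixed, and bounce between them."""
    a, b = GRID[0], start_b
    for _ in range(passes):
        a = max(GRID, key=lambda v: toy_score(v, b))
        b = max(GRID, key=lambda v: toy_score(a, v))
    return a, b


def joint_search():
    """Evaluate every combination of both parameters together."""
    return max(product(GRID, GRID), key=lambda ab: toy_score(*ab))


independent = one_at_a_time()  # stays at (0.0, 0.0), score -0.2
joint = joint_search()         # finds (1.0, 1.0), score 0
```

On this toy function the independent strategy never leaves its starting corner, no matter how many passes it makes, because moving either parameter alone makes the score worse. The exhaustive grid costs more evaluations (25 versus 10 per pass), so it is only the simplest way to show the gap, not a recommendation for how to search.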

[EERO, Valohai]: So, that would take more time, right?

[MIKE, SigOpt]: It either takes multiple times as long, or you spend the same amount of time but get a much worse result.

[EERO, Valohai]: Yeah, yeah.

[MIKE, SigOpt]: That's really, I think, the thing that I would emphasize about the workflow thing is that you know, do your research. There are people out there talking about what are the right strategies and what are the right mechanisms for your particular problem. Read up on it, and make sure that you have a sense of what makes the most sense because otherwise, you're selling yourself short, and you're not doing as well as you could be.

[EERO, Valohai]: All right. Kevin, any dirty stories? You don't have to share if it's more confidential.

[KEVIN, Tecton]: No, I mean, honestly, just if you look at how the data problems around machine learning are solved today, they're all very painfully solved if you don't have a data platform for ML or a feature store in production. Because again, what you see is the Data Scientist builds something in their Jupyter Notebook using some Python code. Now, they have to go over to this Data Engineer or the ML Engineer, who at some point in the future re-implements everything that the Data Scientist already did, to now calculate everything differently in production. It's almost as if you imagine that a Software Engineer, for any new feature that they're going to develop, first implements it in Python. It's one person, and then that person is completely hands-off as this capability gets put into production.

That Developer now just hands this Python code to some Java Engineer, who re-implements everything again, who's now ultimately responsible for it, and who may not even understand the business logic in the first place, but just goes through it line by line and translates the code from Python to Java. That engineer then puts it in production and is then responsible for the uptime, the reliability, and the functionality of this thing. It's basically absurd, and that's what you still see in lots of places where they are trying to solve the data problems around ML without a feature store.

[EERO, Valohai]: I couldn't have put it any better. Totally what's happening. I have one dirty story quickly. I think it's a classic for anybody who's doing deep learning on GPUs. I have heard this story so many times: people end up leaving clusters running in cloud environments. The biggest invoice I've heard of was $20,000 over a weekend for no compute being done whatsoever in AWS. They had a little bit of a talk with supervisors on Monday when they realized it. So, this can rack up some pretty big costs.

Okay, but hey, we have to jump forward. I'll take a few quick questions here first. I have two questions that are around workflows and especially CI/CD. Henry from Brazil is asking, "How can automated tests be inserted? In the context of MLOps, are tools used the same as usual CI/CD?" I could maybe take that one, since what we kind of call ourselves is CI/CD for machine learning.

I think that the main principle and ideology behind everything is the same. Where we see traditional CI/CD tools break down, and I've, by the way, seen Jenkins workflows built to run machine learning, and to a certain extent, it can work, is when you start getting larger amounts of data and you start needing more complex compute. It's pretty hard to spin up a GPU cluster from Jenkins and make it effective.

On top of that, it's pretty hard to manage, what was the version of data, what were the particular output artifacts from a particular training run that then went to the next step of testing? This data flow that you need to have in your machine learning workflow, the pipeline where data flows, doesn't really exist in CI/CD context for software development, and that's where the things start breaking down. Then there was another question that was basically the same thing on CI/CD. Anybody else have anything on this or want to comment?

[KEVIN, Tecton]: Yeah, I just want to add really quick. I think this is actually super important. You want to be able to drive your MLOps or your full ML productionization process with a CI/CD pipeline and CI/CD process. The same thing applies to solving the data problems for machine learning. I published a blog post about this last year, which is called "DevOps for ML Data," which I think is the key to actually really speeding up the development and the deployment of ML applications and solving the data problems under the hood.

The way we solve this with Tecton, for instance, is that you actually define all your features and the feature transformations in a declarative way in files. They're just backed by your GitHub repository or wherever you want to store them. Then you have a CI/CD pipeline, which uses a little CLI that Tecton provides, which you can use to run tests against the feature definitions, which you also use to then productionize the feature definitions, and which you would also use to roll back any changes that you've made. So, what you're now able to do is you can create a new feature definition, check it into Git, have a pull request, have somebody else review it, merge it into master, merge it into your staging branch, kick off a CI/CD pipeline, and test the feature definitions.

Then, when you're happy and you've got successful tests, you productionize the features and push them into production, which then, behind the scenes, actually spins up the data pipelines. Now, everything uses the exact same workflow and the exact same tools that you're used to for your software development process around Git and CI/CD pipelines.
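A hedged sketch of what "feature definitions as files, tested in CI" could look like in plain Python follows. It does not use Tecton's CLI or SDK; the `FeatureDefinition` dataclass, the `order_value_per_item` feature, and the test function are hypothetical names chosen only to show the shape of the idea: the definition lives in a version-controlled file, and a CI job runs a test against it before anything is productionized.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class FeatureDefinition:
    """Hypothetical declarative feature definition kept in a Git repository."""
    name: str
    transform: Callable[[dict], float]


def order_value_per_item(row: dict) -> float:
    """Average value per item; returns 0.0 for empty orders to avoid dividing by zero."""
    return row["order_total"] / row["item_count"] if row["item_count"] else 0.0


ORDER_VALUE_PER_ITEM = FeatureDefinition("order_value_per_item", order_value_per_item)


def test_order_value_per_item():
    """A check a CI job could run on every pull request that touches this file."""
    assert ORDER_VALUE_PER_ITEM.transform({"order_total": 30.0, "item_count": 3}) == 10.0
    assert ORDER_VALUE_PER_ITEM.transform({"order_total": 0.0, "item_count": 0}) == 0.0
```

A test runner such as pytest would pick up `test_order_value_per_item` by its name; a failing assertion would block the merge in the same way a failing unit test blocks an ordinary software change.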

[EERO, Valohai]: Really great point, and I think we're getting close to this concept of it used to be infrastructure as code. Now, I think we're going to start seeing data as code.

[KEVIN, Tecton]: Exactly.

[EERO, Valohai]: Then, on the other hand, we think in the exact same terms for running the processes of training, testing, augmentation, and so on, on data, and building models and productionalizing or pushing models into production. The same thing. Every definition needs to be in code. They need to be committed to Git. They need to be tested. They need to be reproducible so that when you have those features, and you use them in your training pipeline, all of that is actually documented from raw data all the way to the actual model that runs in production. You can always backtrace it. If something breaks down upstream, you can always go to any of those points and pull that, let's say, into your exploration environment, and start working with Jupyter Notebooks to understand: is my data wrong? Is my code wrong? What's happening here?

Okay. Hey, let's move to the next topic of the day. There's a lot of questions, but I'll pull some in at some point, but we need to get through the agenda. Standardization, so one of the issues that we've already maybe talked about here a little bit. Where do we want to allow as much free flexibility around tooling, and working with data, building models, solving those business issues, and where do we want to draw the line so that your organization can actually grow. Maybe sort of talking about standardization in machine learning, the way I see it, it's like I mentioned, the same thing that happened in software development, which is, sort of, how do we go from these hero Developers into teams that can grow exponentially so that we can also deliver exponentially?

If you look at companies like, for instance, Facebook, who, at the height of their growth, probably recruited thousands of people over a month and still had those people be effective in building that software. How do we build a standard enough environment so that you can do that on the data side and the machine learning side too, and how do we still allow as much flexibility as possible? This is a drastically fast-changing, fast-paced environment.

Like, we, for instance, see that when we started, TensorFlow almost didn't exist. It was a joke. Then everybody was [inaudible 00:52:10] is the coolest thing out there. Now, it's been like maybe five generations of different frameworks that were the king in just a few years. What we ended up doing is drawing the line.

You can use whatever tool there is. Everything runs on Docker. So, there's already good tools out there that give you a good point of abstraction. We chose Docker. Whatever tool you want to use, you can, but you also have to deliver the image that can reproduce those results. So, yeah, you can use whatever you want, but you also have to make sure that the next guy who's coming into this project can also do it. How about Mike? Do you have any thoughts on standardization?

[MIKE, SigOpt]: One sort of larger thought, which is that standardization is extremely important for this onboarding process, exactly as you mentioned in the context of Facebook. It's also important in this churn process where you're losing people potentially. Salesforce talks about this from the perspective of when your salespeople are leaving: they're stealing from you because they're taking their Rolodex with them out of the company. That was their key pitch to companies: make them put their Rolodex in the database.

The same logic in some ways applies here. Obviously, I don't think there's a nefarious sense to things so much in the ML process, but I do think it is a very common situation people come in. People leave. I mean, the half-life of a Data Scientist, Data Engineer, in some of these places is a couple of years, maybe even less in some places. So, you have to deal with that, and standardization is one mechanism for dealing with that.

The element of this that I'm going to mention, and this isn't a tool, but it is something we talk about a lot with our customers, is that it really has to have buy-in at the top, and it has to have buy-in at every point down the food chain. Because if individuals don't feel supported by their management, then they are just going to do whatever they're happy with, and that's what ML people oftentimes have grown up with right now, in the current state of the world: they've done what they feel comfortable with. Because that's how they got their current job, by doing what they do best, not standardizing on some broader tool that exists at the company.

Then to push that higher, the only way you can get the standardization is if somebody higher up in the food chain says, "Guys, this is what we have to do." But not just says it, gets the buy-in from everybody else in the company, gets this desire to work in this flow. So, I guess that's more meta than some sort of technical product, but that's been the main discussion we've had with our customers. It's like, look, believing in standardization isn't a person-by-person thing. This has to be an organizational decision.

[KEVIN, Tecton]: Yeah. I think if you want to make it really easy for people in your company to use machine learning in production, there is no way around standardization. The simple reason for that is that standardization leads to automation, and it allows for automation, and automation leads to rapid iteration. As we've established earlier, you need to be able to iterate rapidly in order to make progress with ML really, really quickly.

Oftentimes, people think, oh, there is this one-to-one trade-off between, say, standardization and flexibility, or the speed that you get out of it. But oftentimes, you can just limit the flexibility a little bit, like go from 100% flexibility to just 90% flexibility and vastly speed up all your workflows. You get like a 10x, 100x speed improvement of getting things into production if you just limit the flexibility a tiny bit.

That's what we saw with Michelangelo at Uber, where you had 20 ML training algorithms to choose from and not anything possibly in the world. As a result of that, people were able to train their models and get them into production within just a couple of hours. The same thing with Tecton in our feature store, where, yes, you can use SQL, PySpark, and Python to express your feature transformations, but not any possible way, any possible other language. As a result of that, you get your data into production, again, within hours rather than weeks or longer than that.

[EERO, Valohai]: Great. Great point. Let's see. Do we have any questions here regarding the topic? Here's one on the Salesforce example. So, on the Salesforce example, "Is it the data that is more important when you move companies or the training model that filters the data? I can understand owning the data, but should algorithms be protected, or should the designer be allowed to walk away?"

I think here, well, Michael, you can also answer in your own words, but I think what Mike was trying to say is not that you're stealing the company's data or the company's IP. It's more that when a Data Scientist leaves, it's a matter of whether they leave a notebook with a desktop full of Jupyter Notebook files that are named like Untitled_One_Best Score_Old, and then you have absolutely no idea how they came up with the models that they generated.

Again, that's another dirty story that I've actually seen in the real world. Or whether they leave an actual standardized process where somebody else can then come in and continue working on that. Is that right, Michael?

[MIKE, SigOpt]: Yeah, that's exactly right. I'm not so much talking about ownership of the idea so much as documentation. Can other people follow what you did, reproduce what you did, or did you effectively just leave them with, yeah, sure, a notebook that's Untitled_One_Title_Best_Old_Redone_. Yeah, which I mean, I'm guilty of that too. I have done that in the past. But as an organization, you have to strive to want to get past that, and if you do, there are benefits for doing so.

[EERO, Valohai]: Cool, all right. I don't think we have any other questions on that topic, so let's get to the last topic of the day, which is performance. We're going to finish at just over an hour, so going a little bit past. Getting your models to perform in production, I think that there are so many things that relate to it, from data quality to changes in the world, to whether your model affects the behavior of people and then you have that coming in as feedback. There are so many things. I think if we're talking about performance and optimization, of course, SigOpt, you're the kings of this space, so I'll give this first to Mike.

[MIKE, SigOpt]: I think that the optimization of metrics is extremely important, but for me and when I'm discussing things with customers and prospects, the most important thing at the beginning is the definition of metrics. People have to agree that there is something, which is actually pertinent in a business context, and that agreement needs to be sort of shared across a variety of different stakeholders at the company.

So, this definition of metrics and agreement of value and metrics is the most important step in the process because if you go out and optimize a metric that you thought was valuable, and it turned out that it was actually not correlated with business success or even somehow negatively correlated, which we have seen at our company. Customers thought some metric was really valuable, and it was negatively correlated with business success, and they were really confused. That's just part of the process is that if you optimize the wrong thing, you're going to really hurt yourself.

Beyond that, yeah, studying multiple metrics at the same time, I think, is extremely important, whether you're studying those multiple metrics in the context of trying to understand the trade-offs that have to be made between metrics, or whether you're imposing them as sort of minimum performance thresholds and then saying, "Well, I want good outcomes that satisfy at least this inference speed, no more than this power consumption, no more than this model size, at least this accuracy, no more than this number of false positives on this class." I think incorporating all those metrics into your process is very valuable and gets you closer to immediately recognizing business value.
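The threshold idea Mike describes can be sketched as a simple filter-then-rank step. This is only an illustration under stated assumptions: the `Candidate` fields, the threshold values, and the three candidate models are invented, and a real setup would track whichever metrics the stakeholders agreed on.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """Hypothetical summary of one trained model's measured metrics."""
    name: str
    accuracy: float
    latency_ms: float
    size_mb: float


def pick_model(candidates, max_latency_ms, max_size_mb, min_accuracy):
    """Keep candidates that meet every threshold, then return the most accurate one."""
    feasible = [
        c for c in candidates
        if c.latency_ms <= max_latency_ms
        and c.size_mb <= max_size_mb
        and c.accuracy >= min_accuracy
    ]
    return max(feasible, key=lambda c: c.accuracy) if feasible else None


candidates = [
    Candidate("large", accuracy=0.95, latency_ms=120.0, size_mb=900.0),
    Candidate("medium", accuracy=0.93, latency_ms=40.0, size_mb=200.0),
    Candidate("small", accuracy=0.88, latency_ms=10.0, size_mb=50.0),
]
chosen = pick_model(candidates, max_latency_ms=50.0, max_size_mb=500.0, min_accuracy=0.9)
```

With these made-up numbers the most accurate model is rejected for being too slow and too large, and the "medium" candidate is chosen. Optimizing accuracy alone would have picked a model that cannot be deployed under the stated constraints.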

[EERO, Valohai]: I totally, totally agree with that. I have another story from the field. I just had a talk with a friend who's a senior Data Scientist, and he told me what's the difference between him being a junior and a senior. When he was a junior, he was building a lot of models requested by business, and as a senior, what he's doing is asking why, and not building models anymore at all. Because he's just asking, "Why do you need this metric?" Then they're like, "Hm, good point."

[MIKE, SigOpt]: That is exactly what I'm talking about, and if you can build the one right model instead of trying a million different things, you can get more buy-in from different stakeholders at your company.

[EERO, Valohai]: Yeah, totally.

[KEVIN, Tecton]: Yeah, I couldn't agree more with that. The interesting thing to me, from what we've seen, is that once you have a model that is the right one, that can actually drive the right business metric, then typically it's not actually the model running in production that breaks anymore. We know how to manage this artifact in production, how to serve predictions behind a microservice, and then when it goes down, we can restart it. Those are fairly solved problems.

However, what happens is not the model breaks but the data breaks, and so now the model initially, yes, it was performing well, but eventually, the performance starts to degrade, and there can be so many reasons for that. Typically, the reasons that we see are the data reasons, and the most obvious one is that maybe the world is just changing, and your model, as we talked about earlier, when you trained it, only looked at what the world looked like at any given point of time in the past, but now the world is changing as the model is running in production. So, suddenly, it hasn't seen these patterns of the new reality ever beforehand when it was trained, and so you need to now retrain your model.

So, how do you even know to begin with whether you have to do this? Typically, what you want to do there is you want to look at the distributions of your features when you trained your model and the distribution of those features when you're making predictions in production. For numerical features, does the mean roughly look the same? Does the standard deviation roughly look the same? For categorical features, what categories did you see when you trained your model? What categories do you now see in production as you're making predictions? If the feature distributions, the categories, get massively out of whack, the only thing you can expect the model to do is to perform pretty poorly, and that is now a sign for you that you have to retrain your model against the new state of the world.
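The checks Kevin lists (compare the mean and standard deviation for numerical features, compare the set of categories for categorical ones) can be sketched with the Python standard library. The function names and the `tolerance` rule are assumptions made for illustration; the threshold of a quarter of a training standard deviation is arbitrary, and the sketch assumes at least two values per sample and non-constant training data.

```python
from statistics import mean, stdev


def numeric_drift(train_values, prod_values, tolerance=0.25):
    """Flag drift when the mean or standard deviation moves by more than
    `tolerance` training standard deviations (an arbitrary illustrative rule)."""
    train_mean, train_std = mean(train_values), stdev(train_values)
    mean_shift = abs(mean(prod_values) - train_mean) / train_std
    std_shift = abs(stdev(prod_values) - train_std) / train_std
    return mean_shift > tolerance or std_shift > tolerance


def unseen_categories(train_values, prod_values):
    """Return categories seen in production that never appeared in training."""
    return set(prod_values) - set(train_values)


train_amounts = [1.0, 2.0, 3.0, 4.0, 5.0]
stable = numeric_drift(train_amounts, [1.0, 2.0, 3.0, 4.0, 5.0])        # no shift
shifted = numeric_drift(train_amounts, [11.0, 12.0, 13.0, 14.0, 15.0])  # mean moved by 10
new_countries = unseen_categories(["US", "FI"], ["US", "BR"])
```

A check like this would typically run on a schedule against recent production traffic, and a positive result is a prompt to investigate or retrain rather than proof that the model has degraded.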

Of course, other more obvious problems are you may just have an outage. It may just be upstream. Suddenly, no new data gets landed anymore. Now, you only have basically garbage features in production, so you get the typical garbage-in, garbage-out types of predictions. Now, you have to go up and find the owner of the data pipeline, who now goes in and fixes it.

It can get even worse than that, where what we sometimes see is where you have subpopulation outages where your model may actually perform pretty well in the US, but it doesn't perform super well in Finland right now. You only look at the performance metrics globally, and there's just more predictions happening in the US than there are in Finland. So, overall, things look still fairly healthy, but once you start zooming into the subpopulation, you'd notice, oh, crap. We have a pretty bad outage in Finland, so somebody should go in there and fix it. Unless you have right data monitoring and feature monitoring in place as well, you're probably going to be in for a hard time to identify and debug those issues.
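The subpopulation problem can be shown with a few lines of Python. The numbers below are invented to mirror the US and Finland example: 900 predictions in one segment at 95% accuracy and 100 in the other at 40%. The function names and the record format are assumptions for illustration.

```python
from collections import defaultdict


def overall_accuracy(records):
    """records: sequence of (segment, was_prediction_correct) pairs."""
    records = list(records)
    return sum(int(correct) for _, correct in records) / len(records)


def accuracy_by_segment(records):
    """Compute accuracy separately for each segment."""
    totals = defaultdict(lambda: [0, 0])  # segment -> [correct, total]
    for segment, correct in records:
        totals[segment][0] += int(correct)
        totals[segment][1] += 1
    return {segment: c / n for segment, (c, n) in totals.items()}


# Made-up traffic: the larger segment dominates the global number.
records = (
    [("US", True)] * 855 + [("US", False)] * 45
    + [("FI", True)] * 40 + [("FI", False)] * 60
)
global_view = overall_accuracy(records)      # about 0.895, looks healthy
segment_view = accuracy_by_segment(records)  # FI is at 0.4
```

The global figure stays near 90% because the smaller segment contributes only a tenth of the traffic, which is why per-segment monitoring is needed to notice the outage at all.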

[EERO, Valohai]: Yeah, I totally agree, and I could maybe join in on that sort of world-changing. I often get asked by more junior organizations, like companies that are just starting their journey in machine learning, "What does this mean that the world is changing," and I guess the answer is, "Anything and everything."

I think that a lot of people think that the world changing means that there's a fundamental change in the behavior of humans, for instance. That's not usually what it means. It can be that, but it can also be dust on the factory floor getting on your cameras. It can be somebody pushing a new version of production code that changes the range of some value from zero to one into one to a hundred by accident. It can be a new version of a sensor coming from a vendor where the specification is that the vibration will be from zero to one, but then the value will actually be from one to a hundred, again. There are so many different things, and it's often not the actual behavior of people. It's actually just something upstream in your data input or your code that is changing, and you just don't notice it because the systems get so complicated that it's completely impossible to understand this.

All right. Any thoughts on this while I look at some questions? If we have anything good coming. Now is a good time to enter your final questions before we close up the stream.

[MIKE, SigOpt]: Yeah, any last questions, anybody? Oh, somebody asked for the reference I see. I don't remember the exact reference, but it was something like machine learning is the high-interest credit card of something. I think if you Google that, you'll probably find it. Yeah, I don't remember exactly.

[EERO, Valohai]: Yeah, "machine learning," "technical debt," and "high-interest" is what I used to find the paper.

[MIKE, SigOpt]: Yes. Yes, there it is. Yes.

[EERO, Valohai]: You will find it. It's by Google, and they actually wrote three papers on it, I think, building on top of each other. The final one was, I think, the rubric for machine learning, a framework for testing your whole machine learning pipeline all the way from data testing to production monitoring, and the first principles in that.

It's very thorough, and it gives you a lot of perspective because they did a survey. They built the rubric on, this is the level that we think things should be done at, and then they ran a questionnaire inside Google with different teams that were doing machine learning, and everybody scored horribly. So, if you're not doing well with the subjects that we talked about today, you're not alone. Even the best in the world are struggling with this. It's a new topic. It's a new world. It's very complicated. Luckily, now we're getting tools that are making it easier. You no longer have to code your own version control software, for instance, like you had to at some point in software development. Now, we're starting to see these tools emerge that make it easier.

[MIKE, SigOpt]: Yes.

[EERO, Valohai]: Okay. I'll take one final question from here. "Any recommended techniques for retraining model and data drift?" Kevin, do you have any thoughts on data drift?

[KEVIN, Tecton]: I mean, basically, just continuing what I said earlier. You need to have the right monitoring in place to begin with, in order to identify model drift or data drift. Then you need to have the right automation in place, which is listening for those changes in data, and then this automation needs to have the ability to kick off your MLOps, your CI/CD pipeline, which is then able to actually retrain your model and push it into production.

So, you can wire all of this up. It's not trivial to do. We've done it at Uber. We help our customers at Tecton do it too, but basically, these are the rough steps that you have to go through in order to enable it.
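The rough steps Kevin lists (monitor, detect a change, kick off retraining) can be sketched as follows. This is not how Uber or Tecton implement it. The drift score used here is the Population Stability Index, a technique swapped in for illustration, and the 0.2 threshold is a common rule of thumb taken as an assumption. The `retrain` callback is a hypothetical stand-in for whatever triggers your CI/CD pipeline.

```python
import math


def psi(reference, current, bins=10, eps=1e-6):
    """Population Stability Index between a reference sample and a current sample."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the reference is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clip values outside the reference range
            counts[idx] += 1
        # Floor at eps so empty bins do not cause log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    ref_p = proportions(reference)
    cur_p = proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


def maybe_retrain(reference, current, retrain, threshold=0.2):
    """Call `retrain` (hypothetical pipeline trigger) when drift exceeds the threshold."""
    if psi(reference, current) > threshold:
        retrain()
        return True
    return False


# Hypothetical feature values: training-time data vs. shifted production data.
training_values = [i / 100 for i in range(100)]
shifted_values = [0.9 + i / 1000 for i in range(100)]

triggered = []
maybe_retrain(training_values, training_values, lambda: triggered.append("retrain"))
maybe_retrain(training_values, shifted_values, lambda: triggered.append("retrain"))
print(triggered)
```

In a real setup the callback would start a pipeline run rather than append to a list, and the comparison would run on a schedule or on a stream of incoming feature values.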

[EERO, Valohai]: Cool. Hey, any other closing words?

[MIKE, SigOpt]: Thank you. Thank you for hosting, Eero.

[EERO, Valohai]: Okay, hey, thank you. Thank you, Mike. Thank you, Kevin. Thank you, everybody, for your attendance. This was really amazing. A lot of interesting topics. A lot of great questions. A recording will be out, so keep an eye out for that.

If you're interested in the topics, there is an e-book available. You can find the "Practical MLOps" e-book on our website at least, and I think everybody's website should have it. It covers the higher-level topics discussed here, goes a lot deeper, and gives you more things to think about. So, thank you, everybody. Have a great day.

[KEVIN, Tecton]: Thank you.

[MIKE, SigOpt]: Take care.