Valohai Ecosystem

Slurm

Manage your ML workflows on Slurm clusters

The Valohai MLOps platform makes it easy to orchestrate ML workloads on High-Performance Computing (HPC) clusters and multi-cloud or hybrid-cloud infrastructure without changing your preferred ways of working.

- Agnostic orchestration with auto-scaling and auto-caching systems

- Automatic versioning with complete lineage of your ML experiments

- Unlimited integrations with your favorite technologies through the API

Experience High-Performance Computing like never before!

ML Pioneers across industries choose Valohai

The MLOps platform purpose-built for ML Pioneers

Valohai is the first and only cloud-agnostic MLOps platform that ensures end-to-end automation and reproducibility. Think CI/CD for ML.

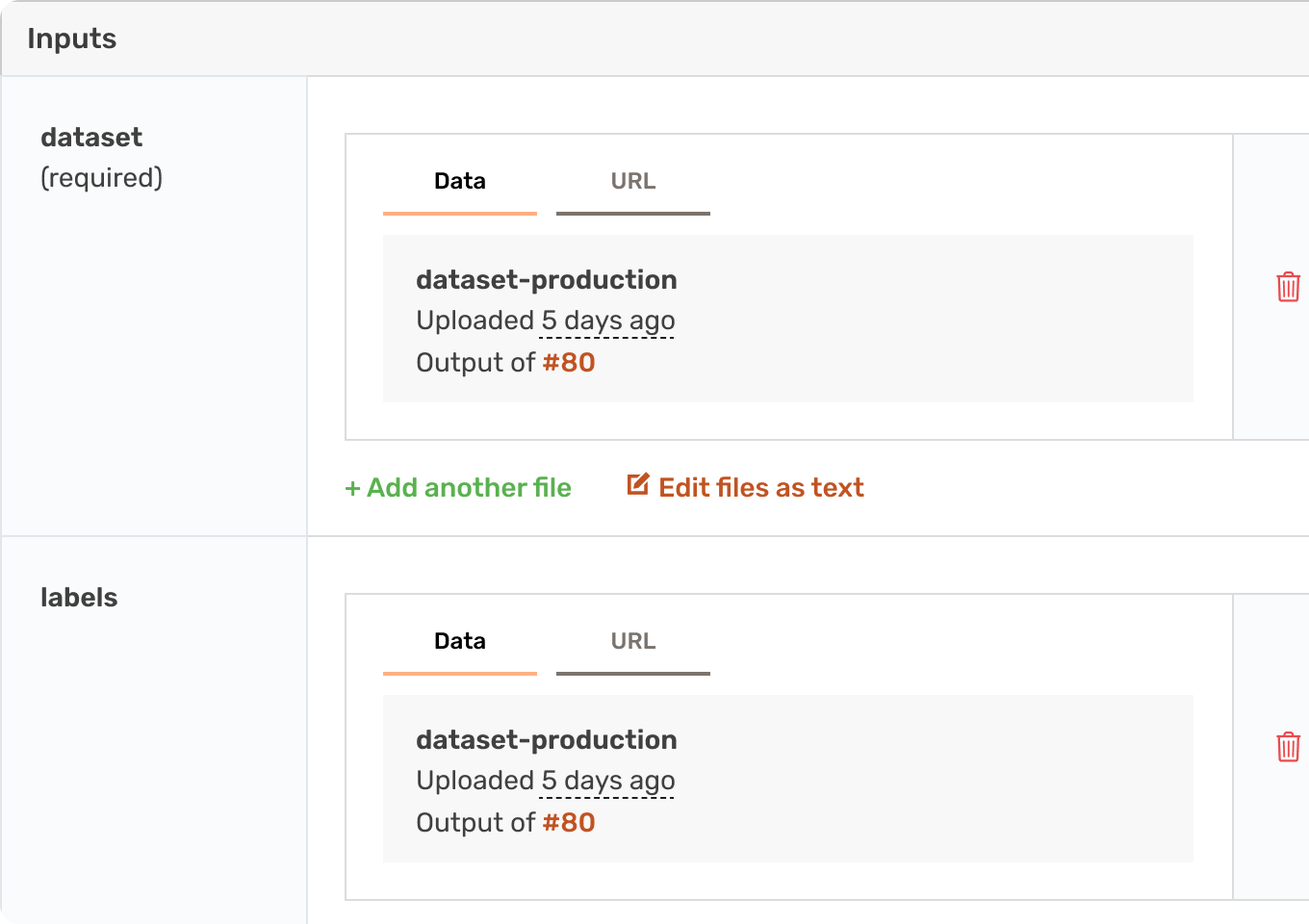

Knowledge repository

Store and share the entire model lifecycle

Collaborate on anything from models and datasets to metrics.

With Valohai, you can:

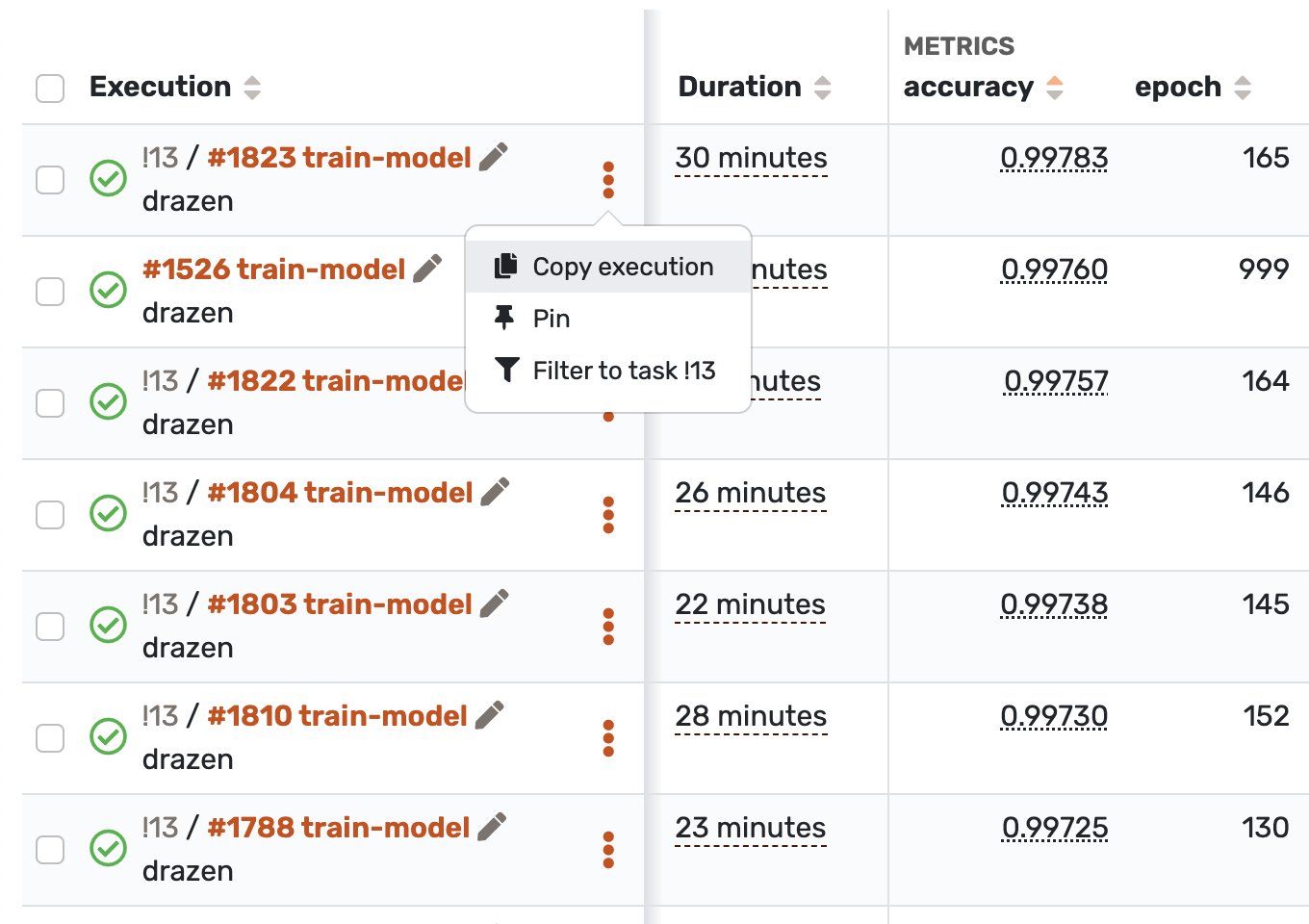

- Automatically version every run to preserve a full timeline of your work.

- Compare metrics across runs to make sure you and your team are making progress.

- Curate and version datasets without duplicating data.

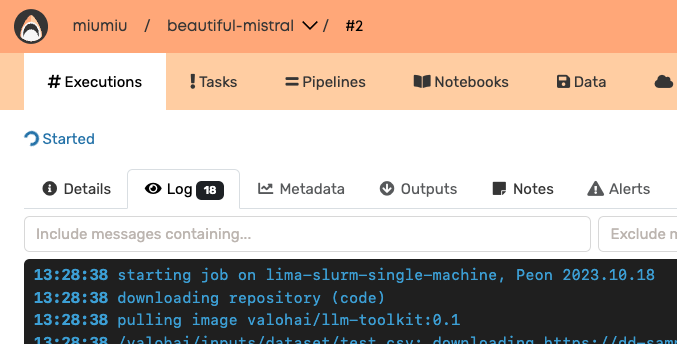

Smart orchestration

Run ML workloads on any hybrid or multi-cloud setup with a single click

Execute anything on any infrastructure with a single click, command or API call.

With Valohai, you can:

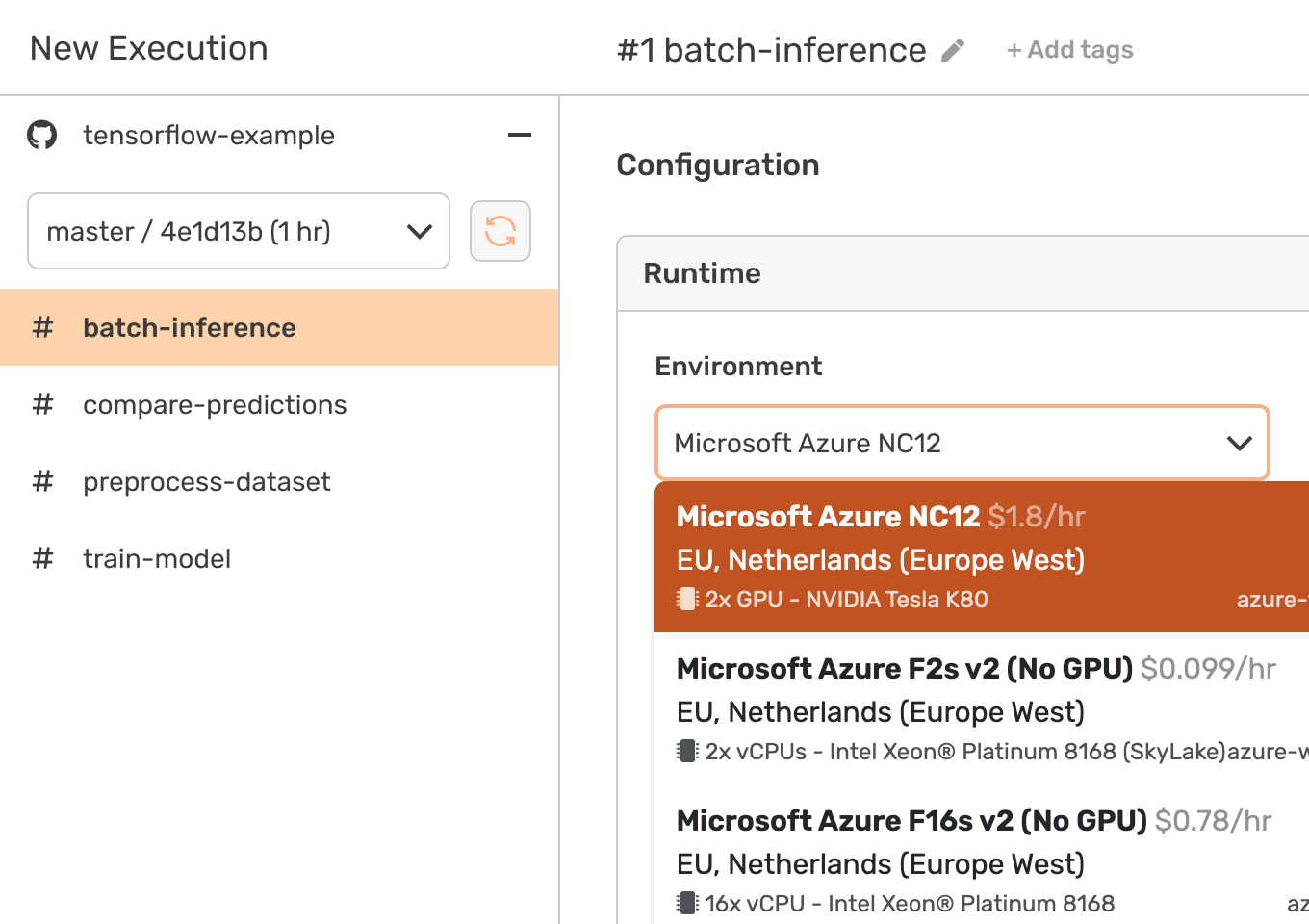

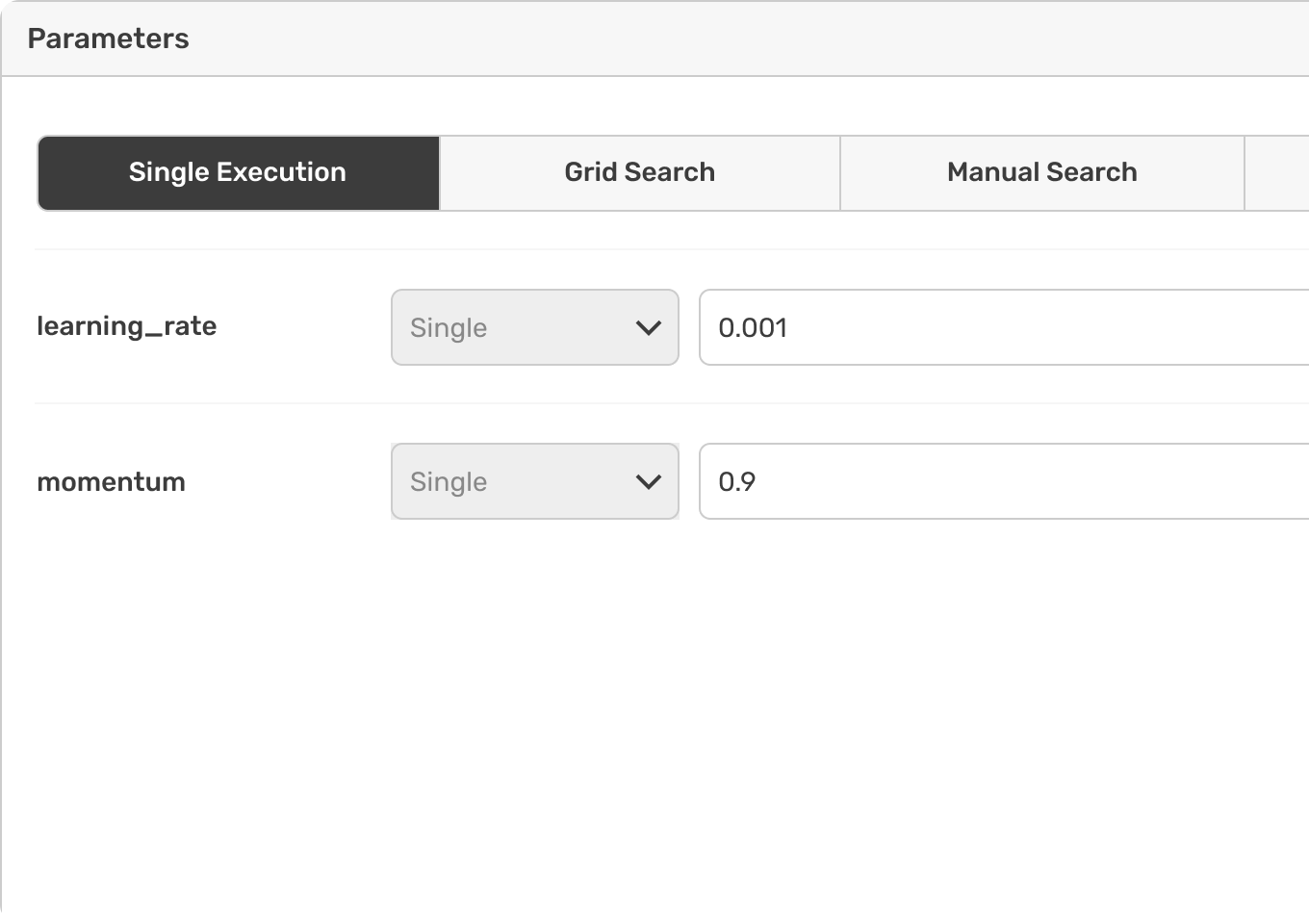

- Orchestrate ML workloads on any cloud or on-premise machines.

- Deploy models for batch and real-time inference, and continuously track the metrics you need.

Developer core

Build with total freedom and use any libraries you want

Your code, your way. Any language or framework is welcome.

With Valohai, you can:

- Turn your scripts into an ML powerhouse with a few simple lines.

- Develop in any language and use any external libraries you need.

- Integrate into any existing systems such as CI/CD using our API and webhooks.
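As a rough illustration of the "few simple lines" idea, here is a minimal sketch using only the Python standard library. It assumes the platform records JSON objects printed to standard output as run metadata, which is worth confirming against the Valohai documentation before relying on it. The helper names `format_metrics` and `log_metrics` are hypothetical, and the loss values are made up.

```python
import json


def format_metrics(epoch: int, **metrics: float) -> str:
    """Serialize one step's metrics as a single-line JSON object."""
    return json.dumps({"epoch": epoch, **metrics}, sort_keys=True)


def log_metrics(epoch: int, **metrics: float) -> None:
    """Print the metrics line to stdout (assumed to be collected by the platform)."""
    print(format_metrics(epoch, **metrics), flush=True)


if __name__ == "__main__":
    # Stand-in for a real training loop; the loss values are illustrative only.
    for epoch, loss in enumerate([0.9, 0.5, 0.3], start=1):
        log_metrics(epoch, loss=loss)
```

Because the script only prints to standard output, it runs unchanged on a laptop, a cloud instance or a Slurm node.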

We don’t have to think too much about committing to one cloud provider. We can develop everything within the multi-cloud setup under Valohai with minimal changes to our code.

Petr Jordan, CTO at Onc.AI

Building a barebones infrastructure layer for our use case would have taken months, and that would just have been the beginning. The challenge is that with a self-managed platform, you need to build and maintain every new feature, while with Valohai, they come included.

Renaud Allioux, CTO & Co-Founder, Preligens