Multi-Cloud Machine Learning

Executive Summary

80% of companies rely on hybrid cloud for their IT infrastructure strategy. Machine learning initiatives are often the exception to the rule: there, companies tend to focus on a single cloud instead. This approach has two major drawbacks: cost and vendor lock-in.

We have identified four paths that companies often explore to move from a single cloud to hybrid cloud in their ML initiatives. Each path comes with its own pros and cons, depending on which stakeholder's perspective you take.

Multi-Cloud is the Default (for Traditional Software)

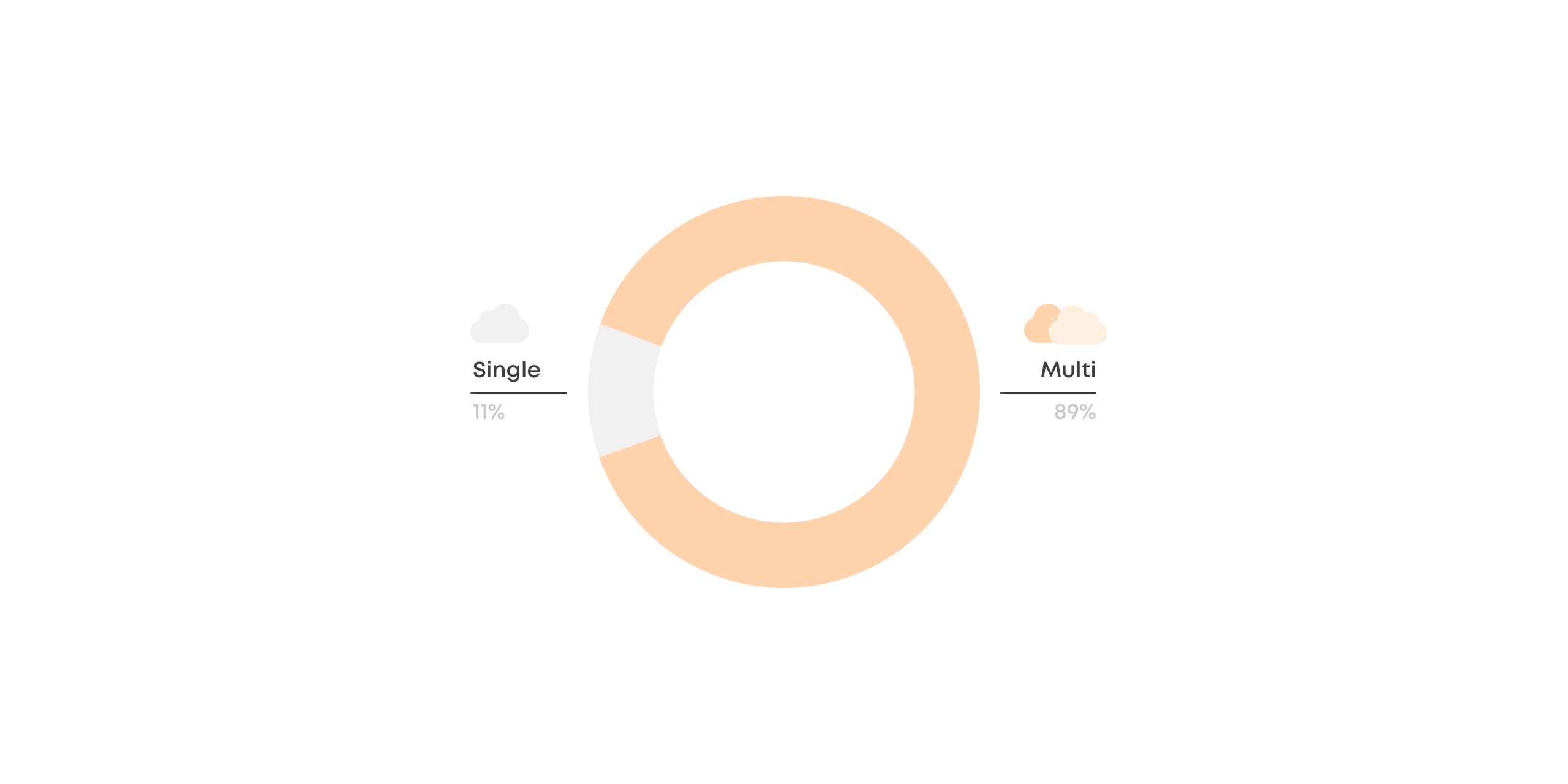

The State of the Cloud Report 2022 from Flexera reveals that, of all companies using the cloud, 89% have a multi-cloud strategy, meaning they use more than one computing provider. The study aggregates answers from 753 respondents across various company sizes, industries, and geographies.

While multi-cloud is the explicit default as a company-wide strategy, many organizations are not exercising it within their ML initiatives. In fact, multi-cloud is often an afterthought when it comes to ML infrastructure.

There is a reason why ML is lagging, but we'll get to that later.

First, let's take a closer look at how the cloud pie is sliced.

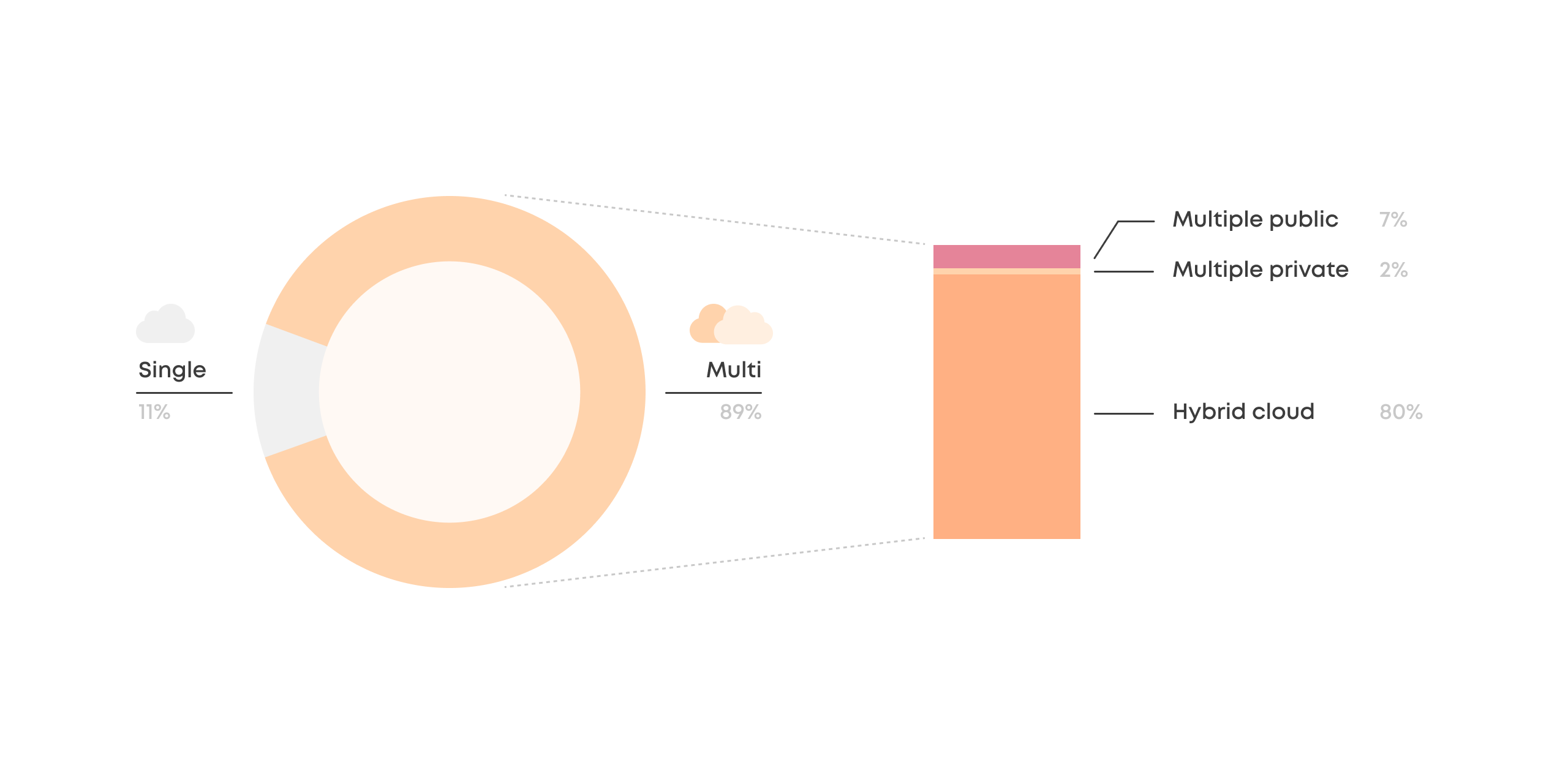

The Multi-Cloud is a Hybrid Cloud

Companies with a multi-cloud strategy predominantly use both public and private clouds simultaneously. This strategy is called the hybrid cloud, and according to Flexera’s report, 80% of all companies utilize the hybrid cloud strategy.

This makes sense as the hybrid cloud offers the best of both worlds:

- Flexibility of the public cloud.

- Security and cost optimization of the private cloud.

Multiple public clouds are used by 7% of companies. While this setup offers some diversification and cost leverage, it falls far short of the benefits of the hybrid cloud strategy.

The Hybrid Cloud ML is on the Rise

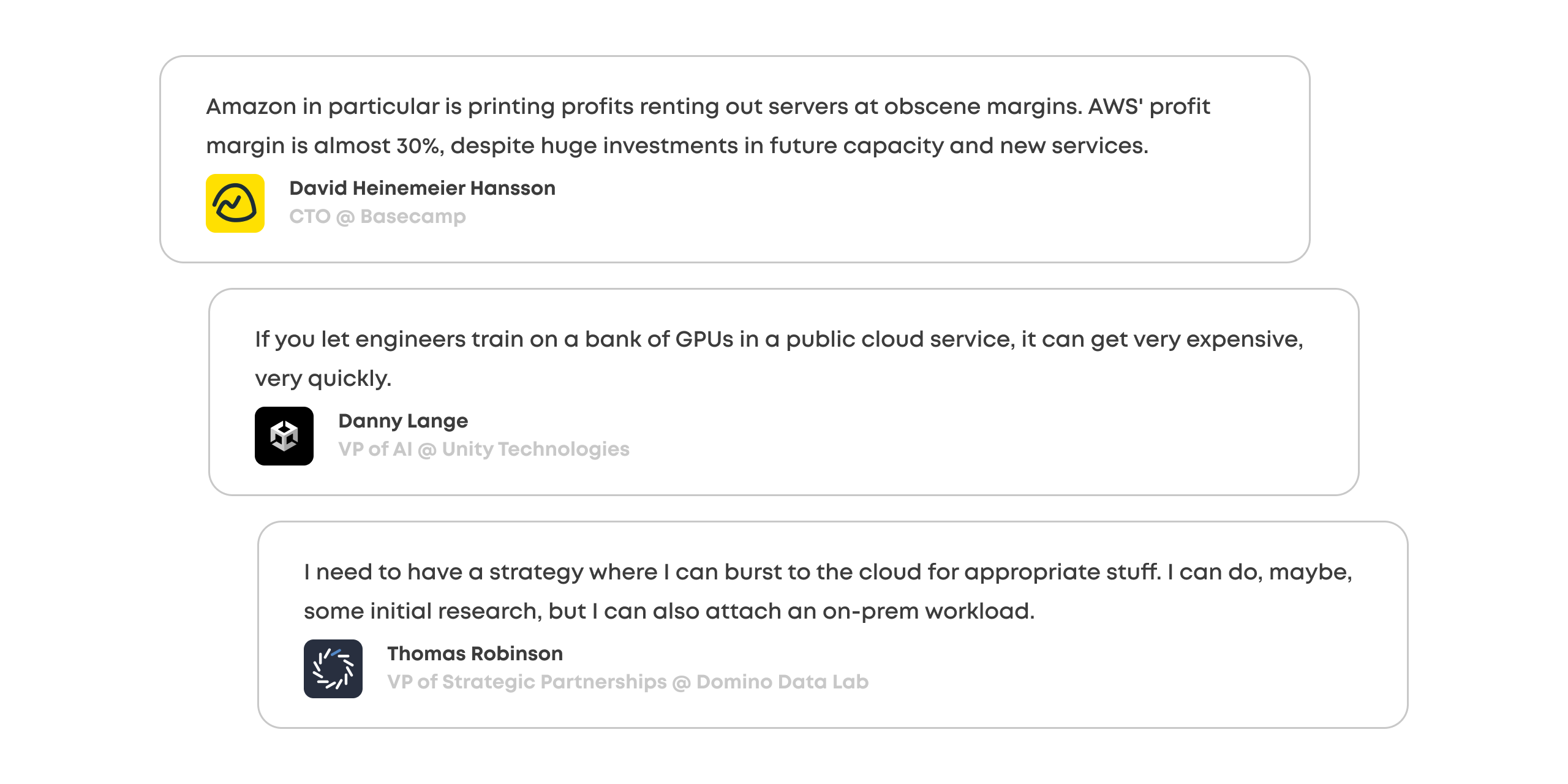

A public cloud is ideal for workloads that need to scale flexibly without downtime, e.g. to meet sudden usage spikes. Plenty of ML workloads fit this description, especially when it comes to using models for real-time inference.

The flexibility of the public cloud does come with its drawbacks:

- Huge cost margins, especially for ML-specific computation (e.g. SageMaker)

- Incompatibility with industry regulations

- Vendor lock-in

There’s a large part of ML where public cloud’s flexibility isn’t a requirement and workloads aren’t as time-sensitive, including model training and batch inference. In these areas, there are ample opportunities for cost optimization using on-premise and private cloud infrastructure.
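The split described above can be expressed as a simple placement rule. The following is a minimal sketch, not a recommendation for any specific setup: the `Workload` fields, the `Target` names, and the ordering of the checks are all illustrative assumptions.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    """Hypothetical placement targets in a hybrid cloud setup."""
    PUBLIC_CLOUD = "public_cloud"
    PRIVATE_OR_ON_PREM = "private_or_on_prem"


@dataclass(frozen=True)
class Workload:
    """Illustrative workload description; the field names are assumptions."""
    name: str
    latency_sensitive: bool  # e.g. real-time inference
    bursty: bool             # must absorb sudden usage spikes
    regulated_data: bool     # data that regulations keep off the public cloud


def place(workload: Workload) -> Target:
    """Pick a target for a workload using the reasoning in the text."""
    # Regulatory constraints take priority over every other trait.
    if workload.regulated_data:
        return Target.PRIVATE_OR_ON_PREM
    # Time-sensitive or spiky workloads benefit from public cloud flexibility.
    if workload.latency_sensitive or workload.bursty:
        return Target.PUBLIC_CLOUD
    # Training and batch inference can run where compute is cheaper.
    return Target.PRIVATE_OR_ON_PREM
```

With these assumptions, a real-time inference service lands on the public cloud, while a nightly training job or a batch inference run lands on private or on-premise infrastructure.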

Companies have already shifted to a hybrid cloud strategy, so why is it not exercised in ML despite the apparent opportunities?

The answer is simple: ML is more complicated than traditional software development.

Let's go deeper to understand why.

Quotes by David Heinemeier Hansson, Danny Lange & Thomas Robinson

From Single to Multi-Cloud

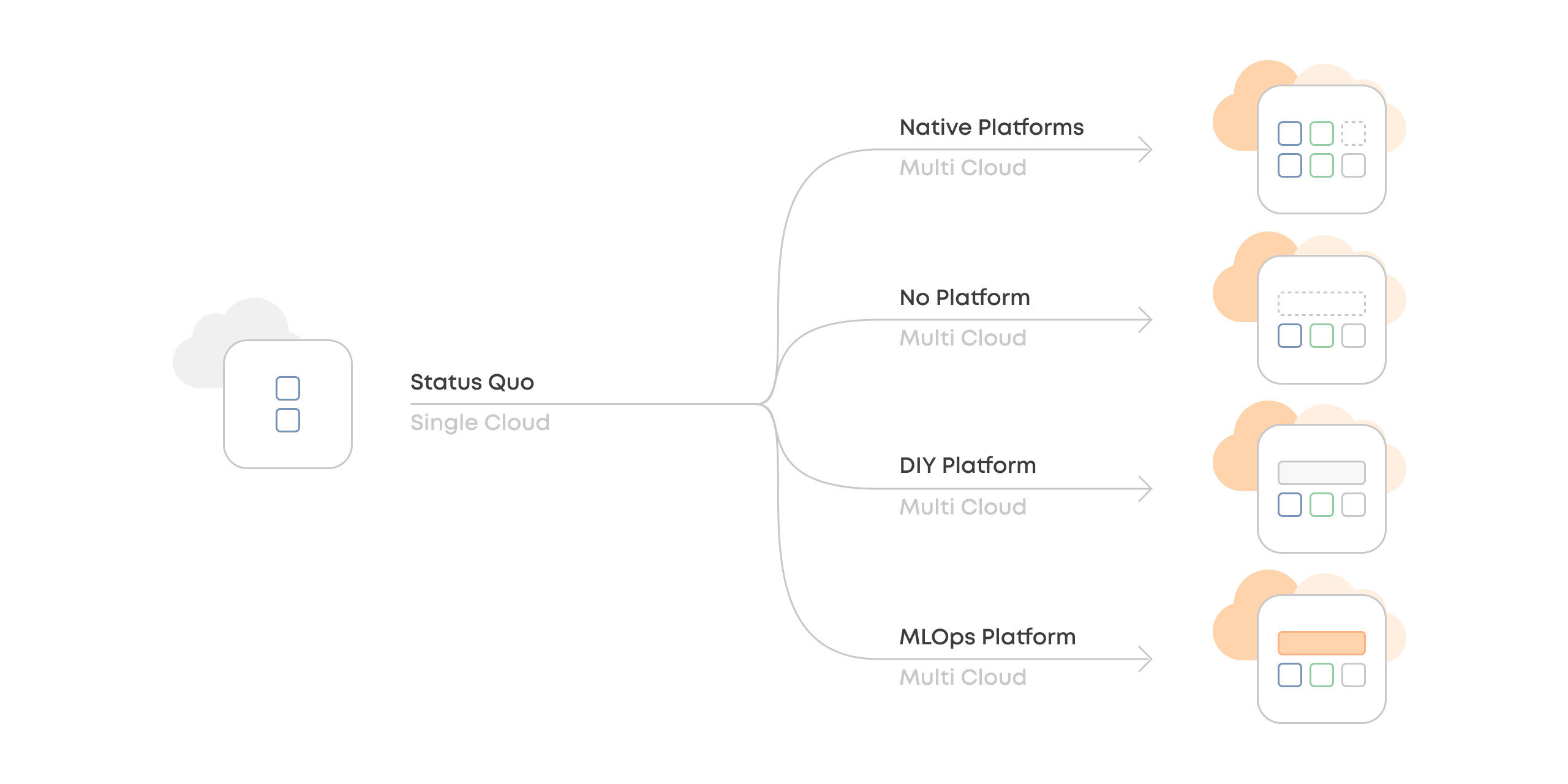

Most ML teams are in the status quo: they rely on a single cloud provider and often use the ML platform native to that cloud. The path to multi-cloud turns out to be more complex than a straightforward yes/no decision.

We’ve identified four different paths that companies tend to explore when they migrate from single-cloud ML to multi-cloud ML:

- Native Platforms

- No Platform

- DIY Platform

- Managed MLOps Platform

Each option has different tradeoffs and may be more or less ideal depending on the stakeholder. Therefore, we evaluate these paths using three key areas (User Experience, Maintenance Cost and Infrastructure Cost) and provide three stakeholder perspectives (Business Owner, IT and Data Scientist) for each path.

Let's look at them one-by-one.

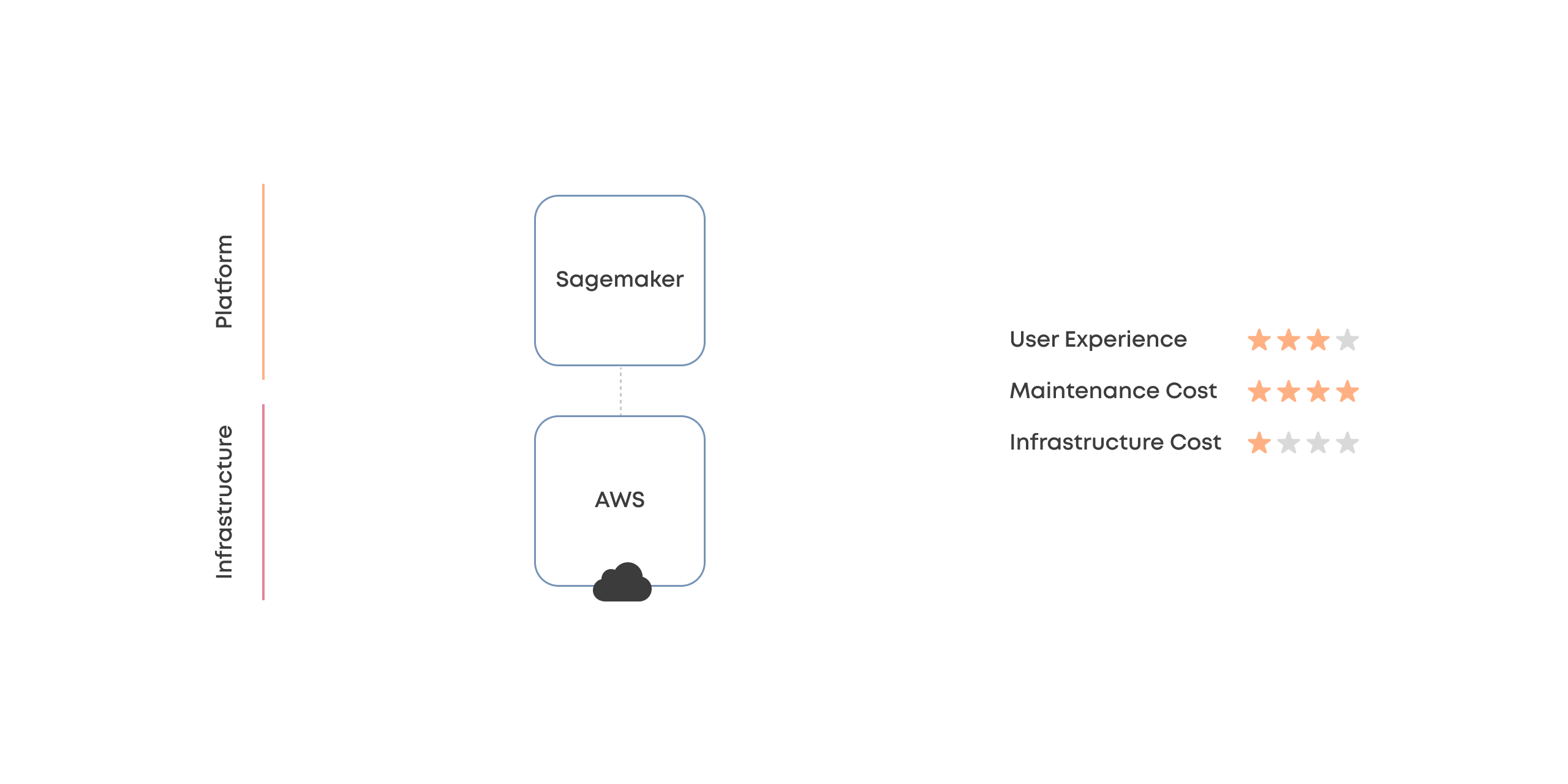

Status Quo

Single Public Cloud + Cloud Vendor Platform

The status quo is a single cloud vendor with its own flavor of ML platform, such as SageMaker.

This is where many ML practitioners are stuck today, at a local maximum. Centralizing all ML workloads on a single cloud provider limits complexity but also possibilities.

The most significant drawback is that teams cannot optimize for cost, even in use cases where a public cloud doesn’t inherently provide any additional value. In addition, cloud vendor ML platforms also introduce technical restrictions that may inhibit certain use cases.

Perspectives

Manager: "The costs are too high, and there is absolutely nothing we can do about it."

IT: "We are happy! Everything is easy in our favorite cloud."

Data Scientist: "Most things are straightforward and simple, but the cloud vendors' platform is not the snappiest, and the libraries are sometimes lacking."

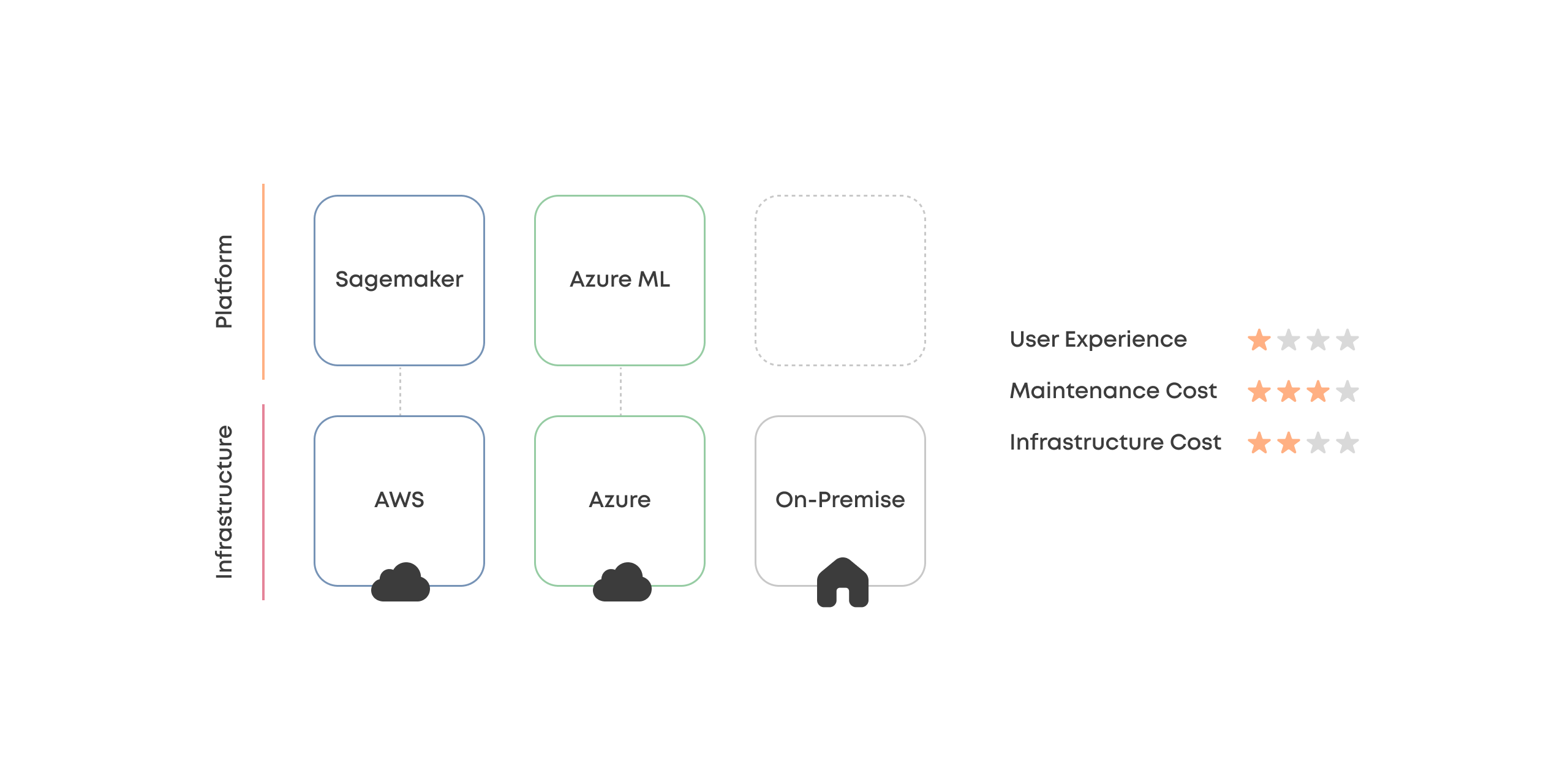

Option #1

Native Platforms

Using multiple cloud vendors with their multiple different ML platforms simultaneously, for example, on a per-project basis.

It is common for ML consultancies to end up here, as the clients have varying cloud vendors.

This approach opens up a lot of possibilities but suffers from complexity. For example, the team can’t build shared best practices because each cloud has its unique technological considerations for both IT and practitioners. Additionally, on-premise environments are hard to integrate into this model, limiting much of the cost leverage.

Perspectives

Manager: "More cost optimization opportunities, but mostly in theory. The individual projects are stuck in a single cloud anyway."

IT: "This is horror. We need to jump between multiple cloud vendors and their subtle differences. Complexity is through the roof."

Data Scientist: "I liked the simplicity of single cloud more. Now I need to learn multiple ways to do the same thing."

Option #2

No Platform

Tossing away the ML platforms and trying to use the hybrid cloud without abstraction layers.

While utilizing cloud and on-premise computing directly offers the most flexibility, it is impractical, mainly because of the poor user experience for practitioners. Data scientists need to be experts in cloud technologies or rely on DevOps/IT support from other team members, which leads to enormous overhead.

Perspectives

Manager: "I could optimize costs, but I have no centralized tools to do it."

IT: "Still horror. Need to manage multiple cloud vendors. Hard to find experienced people for Azure, too."

Data Scientist: "Impossible to get anything done as I'm constantly stuck with engineering. It's hard enough for me to use a single cloud without an ML platform."

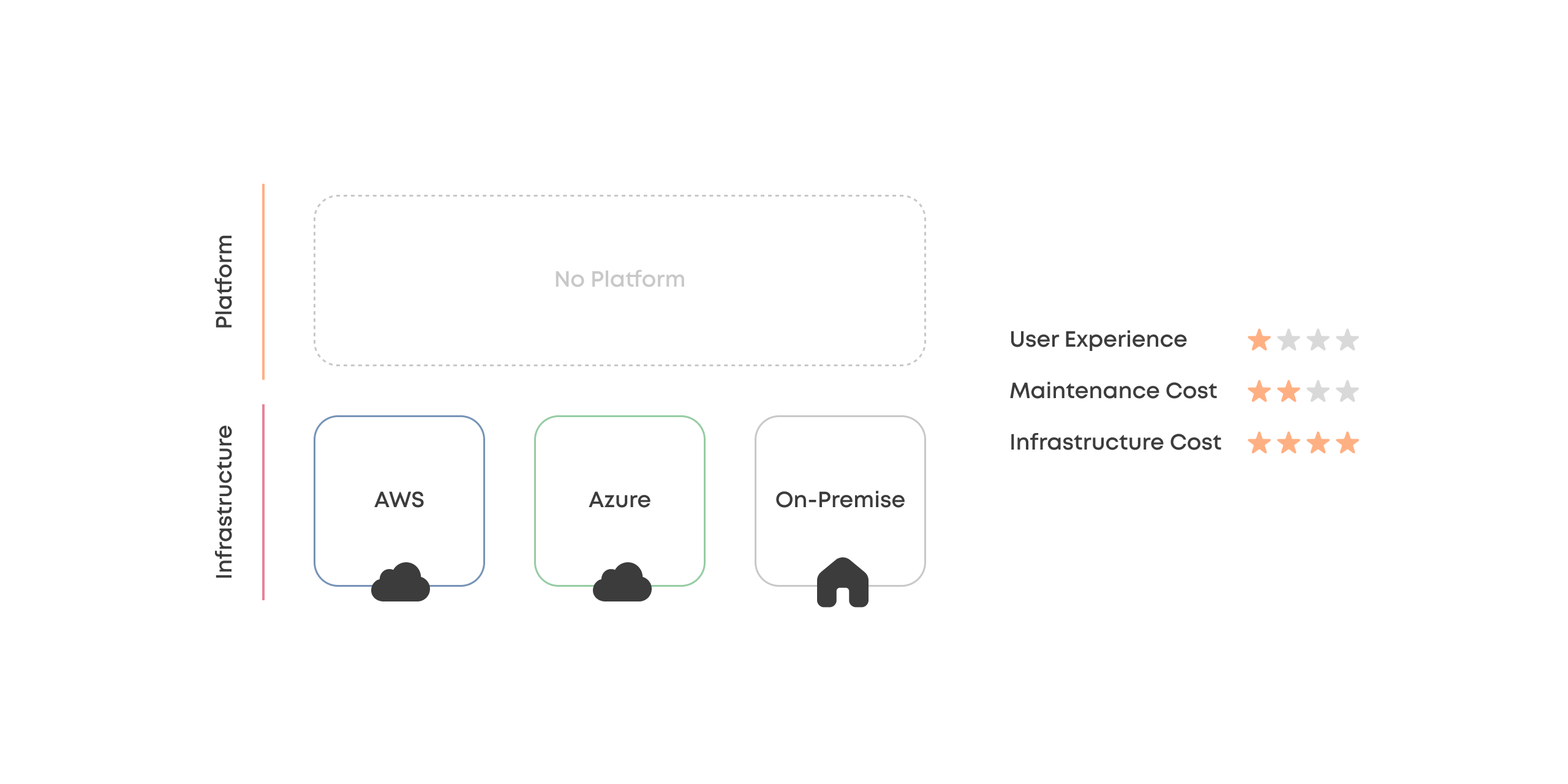

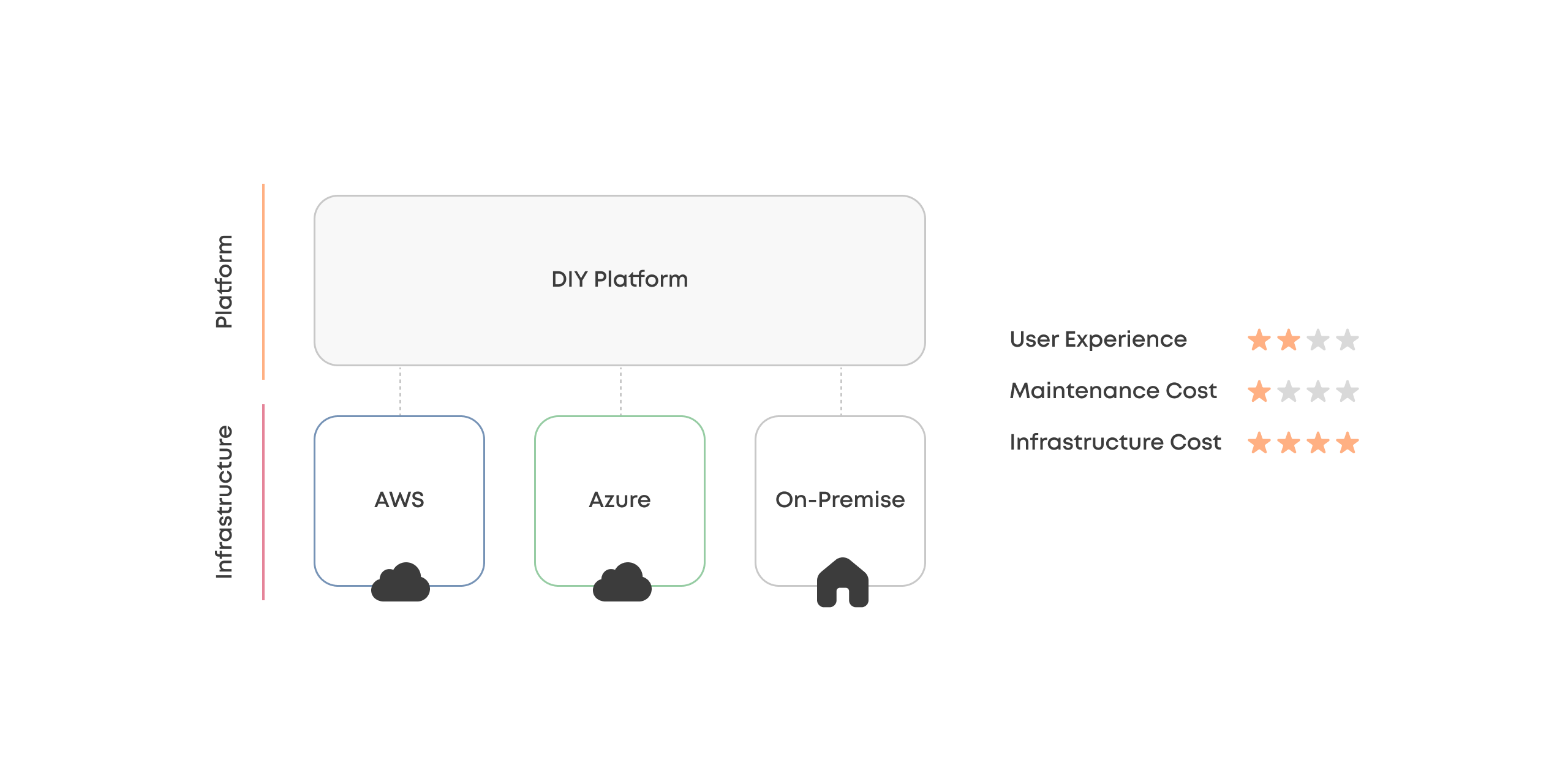

Option #3

DIY Platform

Building an in-house MLOps platform to manage it all is a common playbook in cutting-edge tech companies such as Uber, Netflix, Google, and Meta.

A DIY approach offers enormous flexibility for finessing the practitioners’ user experience and optimizing infrastructure costs at the expense of maintenance and development costs.

Even most enterprises lack the scale at which the maintenance and development costs can be justified for a non-core platform. Insufficient investment, in turn, will result in a sub-par experience for practitioners.

Perspectives

Manager: "I can finally monitor and optimize costs, but building our platform is slower and more expensive than we thought."

IT: "More separation between the data scientist and the engineering, but we are struggling with bugs and keeping up with the in-house MLOps platform."

Data Scientist: "It is nice to have our platform, which can be customized to our use cases, but it is unreliable and lacks features. I sometimes need to write cloud-specific solutions to work around its problems."

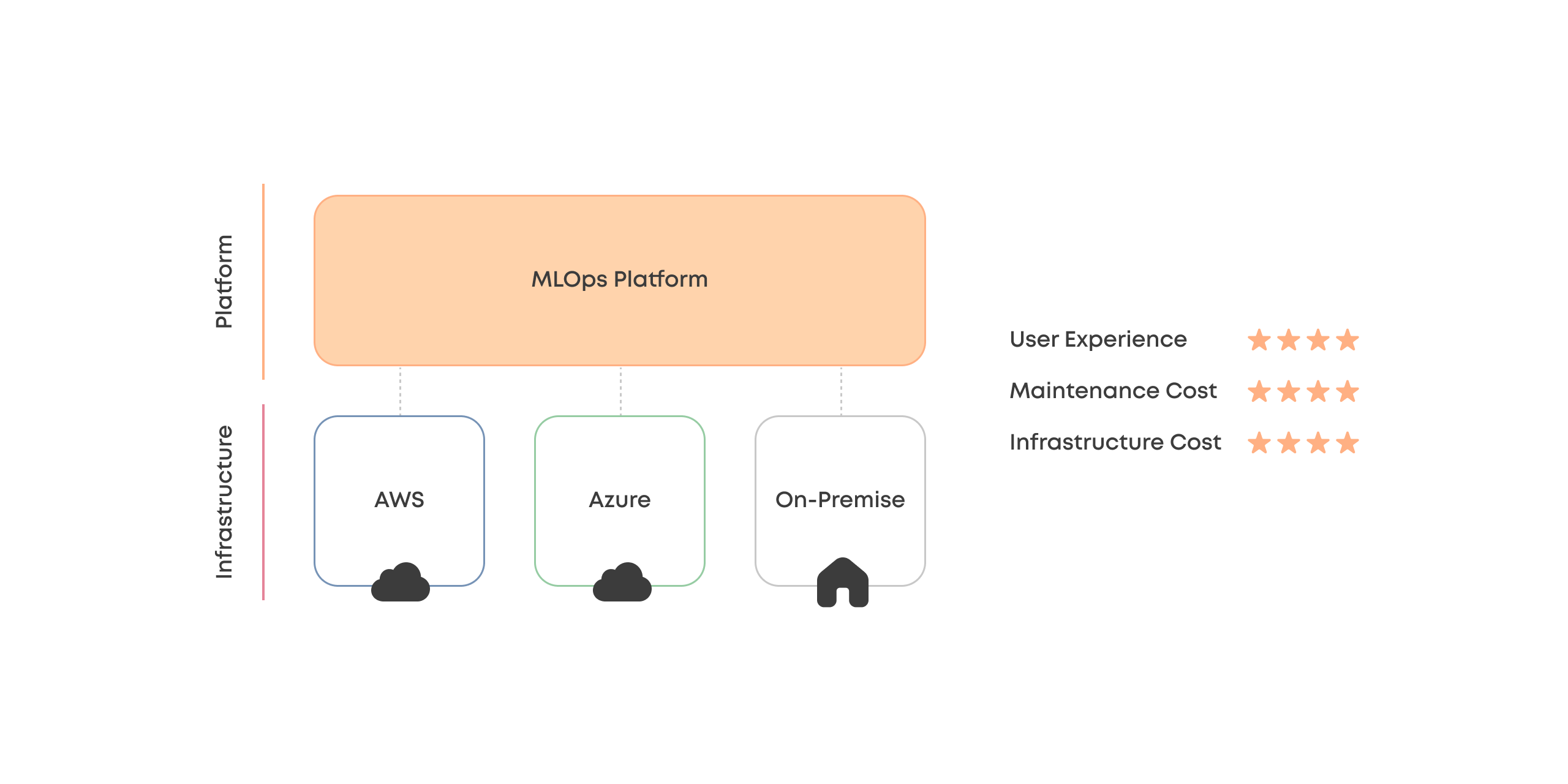

Option #4

Managed MLOps Platform

While hybrid cloud is necessary, it is very complicated and building an in-house MLOps platform is not core business for most companies.

The best option is a managed MLOps platform (Valohai) as a unified abstraction layer between practitioners and their hybrid cloud infrastructure. This approach reduces complexity from both the usage and the management point of view while opening up any number of options on the infrastructure side.
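To make the idea of a unified abstraction layer concrete, here is a minimal sketch of the pattern. It does not represent Valohai's API: `Job`, `ComputeBackend`, `FakeBackend`, and `Platform` are hypothetical names chosen for illustration, and the backends are stand-ins that only record what was submitted.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class Job:
    """A unit of ML work, described independently of any cloud."""
    name: str
    command: str


class ComputeBackend(Protocol):
    """What every environment must provide (hypothetical interface)."""
    name: str

    def submit(self, job: Job) -> str: ...


class FakeBackend:
    """Stand-in for a real cloud or on-premise integration."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.submitted: list[Job] = []

    def submit(self, job: Job) -> str:
        # A real backend would provision compute; this one only records the job.
        self.submitted.append(job)
        return f"{self.name}:{job.name}"


class Platform:
    """Single entry point for practitioners, whatever the environment."""

    def __init__(self, backends: dict[str, ComputeBackend]) -> None:
        self._backends = backends

    def run(self, job: Job, environment: str) -> str:
        # The caller names an environment; cloud-specific details stay hidden.
        if environment not in self._backends:
            raise KeyError(f"unknown environment: {environment}")
        return self._backends[environment].submit(job)
```

In this sketch, a data scientist calls `Platform.run` with the same `Job` whether it targets a public cloud or an on-premise cluster, and IT adds or swaps environments by registering another backend.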

Perspectives

Manager: "I have a centralized place to optimize and monitor costs and projects. Loving it!"

IT: "We can manage all cloud resources from the same place, and the MLOps platform is reliable and extendable when we need to do something special. Very nice!"

Data Scientist: "I don't need to worry about different clouds anymore. Everything works! The UI is much snappier than what the cloud vendors had, too."

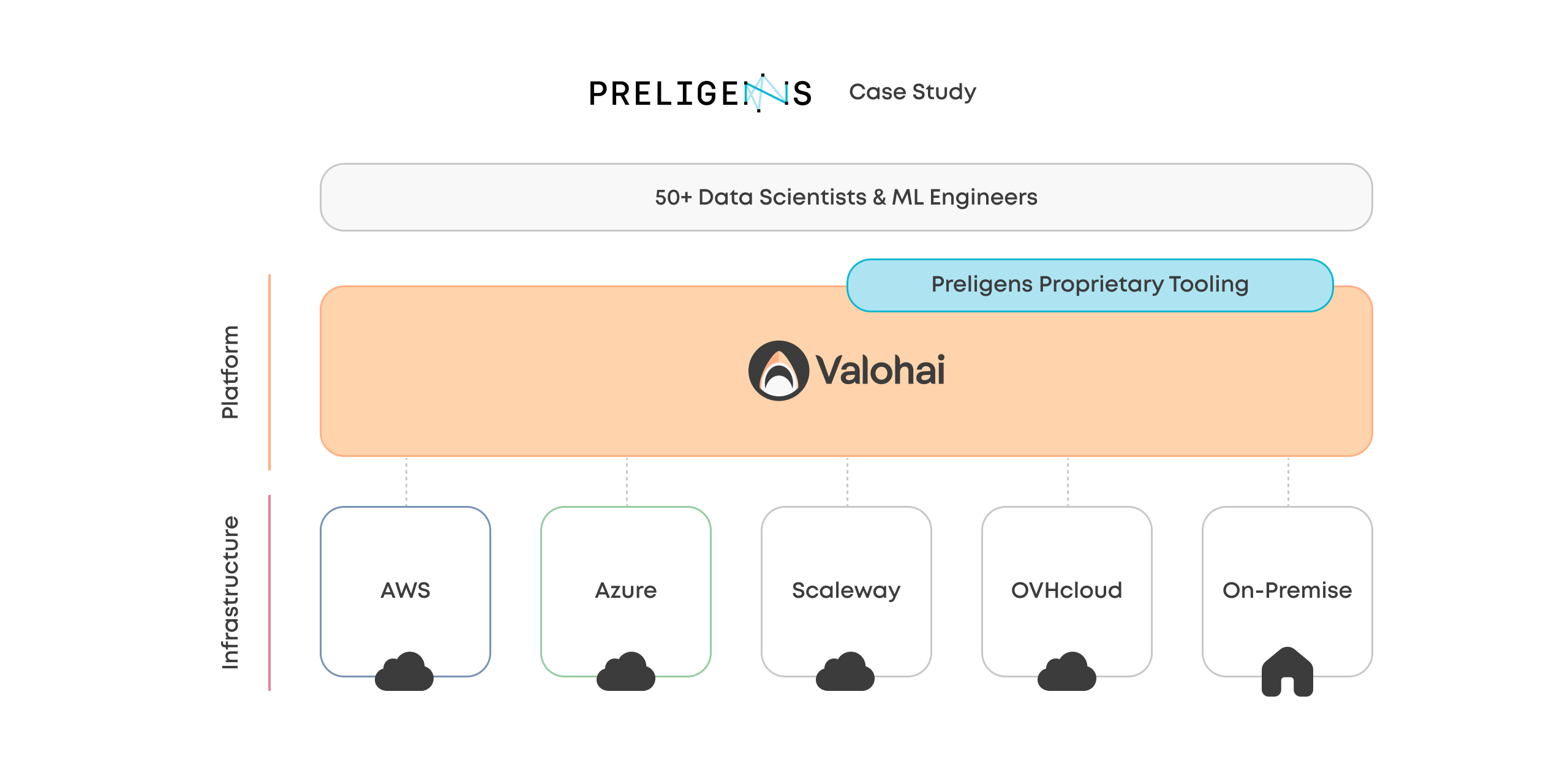

Preligens uses Valohai for hybrid cloud ML.

While many options exist for an ML multi-cloud strategy, most fall short for the stakeholders.

Preligens is a company building software for analysts in the defense and intelligence space, and the Valohai MLOps platform functions as the infrastructure layer for their successful hybrid cloud strategy.

Preligens considered the DIY path as an alternative but found Valohai to be the more cost-effective solution:

Building a barebones infrastructure layer for our use case would have taken months, and that would just have been the beginning. The challenge is that with a self-managed platform, you need to build and maintain every new feature, while with Valohai, they come included.

Renaud Allioux Co-Founder & CTO, Preligens

With Valohai, Preligens can run ML workloads flexibly depending on regulatory requirements and cost-effectiveness. They have also built additional proprietary tooling on top of Valohai using its APIs without focusing on the infrastructure layer themselves.

Read more about Preligens’ hybrid cloud approach and experience with Valohai.

More about Valohai

Valohai is the MLOps platform purpose-built for ML Pioneers, giving them everything they've been missing in one platform that just makes sense. Now they can run thousands of experiments at the click of a button and easily collaborate across teams and projects, all using the tools they love. Valohai empowers ML Pioneers to build faster and deliver stronger products to the world.

Book a demo to learn more.

Valohai platform demo

Valohai platform factsheet

Practical MLOps eBook