In our series of machine learning infrastructure blog posts, we recently featured Uber’s Michelangelo. Today we’re happy to be interviewing Ville Tuulos from Netflix. Ville is a machine learning infrastructure architect at Netflix’s Los Gatos, CA office.

For a dissection of Ville's introduction to Netflix's ML infrastructure, check out our previous blog post. But for now, let's jump to the interview:

Thank you, Ville, for joining us. Could you briefly tell us about your job at Netflix and what problems you are solving?

Thanks for having me! Netflix applies machine learning to hundreds of use cases across the company. A few years back we realized that although our ML use cases are very diverse, we could benefit from a common ML infrastructure which would help our data scientists to iterate on these projects faster and more effectively. My team got the mandate to design and build this infrastructure.

What kind of machine learning are you doing at Netflix? Any interesting use-cases you can mention?

Pretty much all areas of our business utilize ML in one form or another, which I find absolutely fascinating. Since the use cases are so diverse, we employ a wide spectrum of ML methods from classical statistics to deep learning.

When it comes to ML use cases, our personalized video recommendations at Netflix.com are well known, but even more magic takes place behind the scenes. For instance, we use ML to optimize various elements of movie production and enhance the quality of streaming.

Cool. And thank you for the links. Definitely worth checking out for everyone! How about the ML infrastructure then? What does it consist of? Is it all encapsulated behind a single service or do you have many infrastructures for various use-cases?

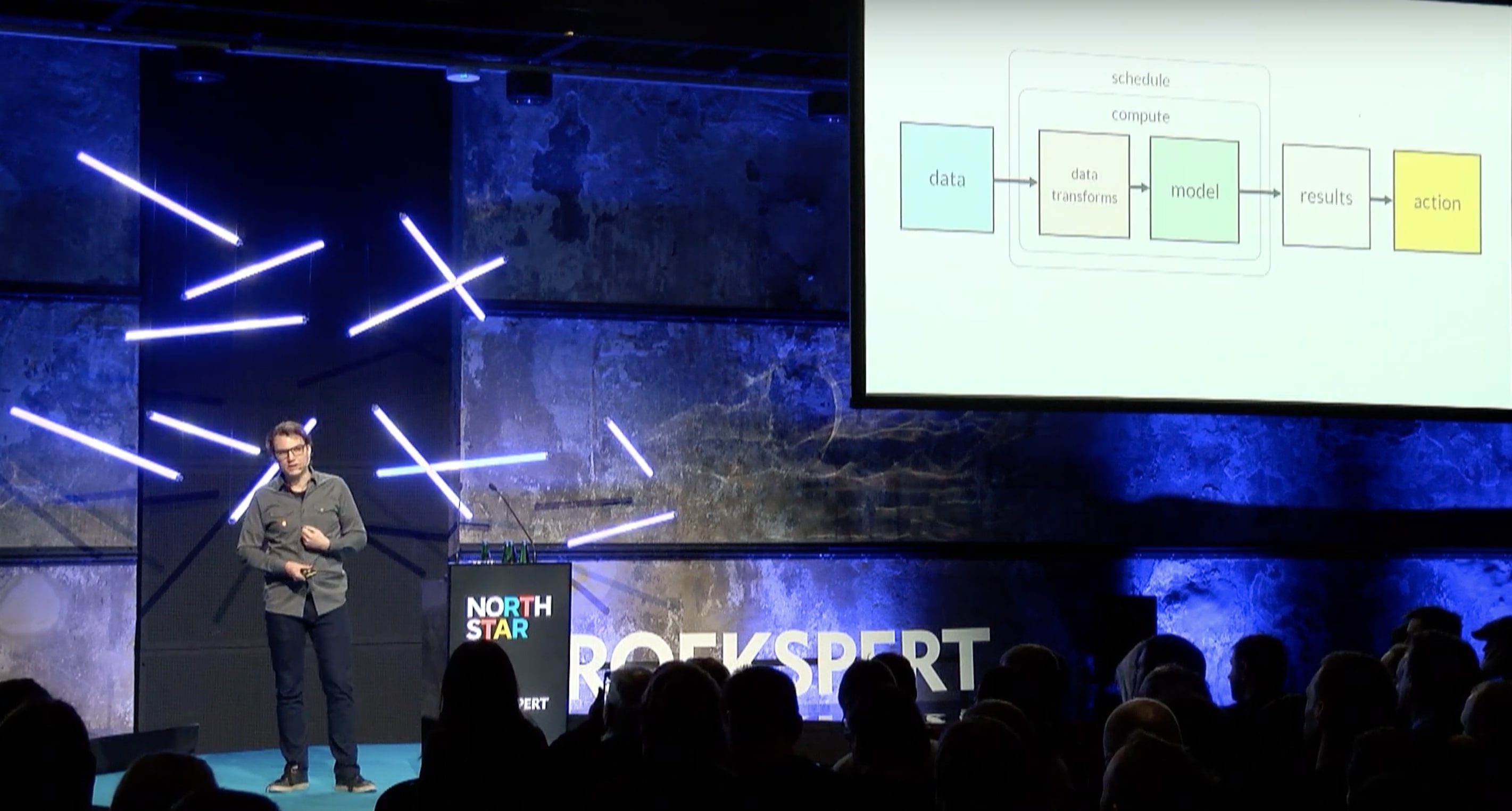

We have a sophisticated stack of infrastructure powering the member-facing Netflix.com. We have a separate infrastructure for internal use cases. The former stack is optimized for a focused set of high volume, high velocity use cases whereas the latter stack optimizes for a wide variety of different use cases.

Nice. How big is your team building the ML infrastructure? What roles do you have within the team?

We have 10+ people in the team currently. Netflix has an amazing culture which gives every engineer plenty of freedom and responsibility to execute their work autonomously. Hence we don’t have very prescriptive roles in the team. All of us have a background in big data systems, data science tooling, and/or applied machine learning.

What tools, frameworks, environments etc. do your Data Scientists use mostly? If very vast, just give a few examples of edge cases.

We have been developing an internal framework called Metaflow which gives our data scientists superpowers. Metaflow takes care of the hidden 80% of effort in data science projects which is not directly related to building models. When it comes to models, we use common off-the-shelf frameworks such as scikit-learn, XGBoost, and TensorFlow.

We are also very active users of and contributors to the Jupyter notebook ecosystem.

Metaflow looks very interesting. Kinda the same thing Valohai does as well. Do you have one centralized data lake or various distinct data sources? Where do you run your experiments? Own hardware or cloud?

Our main data lake is built on Amazon S3. We ingest upwards of a trillion events every day, so the scale is pretty massive.

Our compute resources are fully containerized, running on Titus, which runs on AWS. Titus is an open-source container management platform developed at Netflix. Our ML use cases are quite resource hungry, so having an elastic, highly scalable compute backend is a must.

What have been the hardest problems to solve so far in building your infrastructure?

We haven’t approached ML infrastructure as a technical problem primarily. Instead, we consider ML infrastructure as a productivity tool for data scientists. We have described our approach as Human-Centric Machine Learning Infrastructure.

Designing highly usable, delightful tools for humans is hard, especially in a new, uncharted domain like data science. There aren’t many great examples to follow out there. It seems that everyone is still figuring out what the best practices and patterns are.

Naturally, there are many difficult technical challenges as well, especially related to our scale of operations, but they feel more like “engineering as usual”.

How do the Data Scientists interface with you? “Daily and a lot” or “almost everything is automated and they don’t have to communicate with you as much”?

We work in close contact with our data scientists. The infrastructure we provide should empower them to be fully autonomous, but first-class user support is something that we feel very strongly about. We want to understand all sources of friction in their daily life, both organizational and technical, and consider how we could remove them.

In practice, we have a very active Slack channel.

How about inference deployments, like making the predictions? E.g. do you use batch predictions on historical data for analysis or more like real-time predictions? How are the deployment endpoints monitored?

We support both batch predictions and real-time inference. Metaflow includes a built-in model hosting service which our data scientists can use easily even if they have no background in setting up scalable microservices.

Luckily we didn’t have to reinvent the wheel here. Netflix has been innovating a lot around cloud-based microservice architectures, so we have been able to leverage existing DevOps best practices in the world of ML.

Sounds very nice! Making Data Scientists autonomous is something we too feel is imperative in improving productivity. Netflix has always been a pioneer of large scale deployment testing. Do you have any interesting ideas you are implementing around ML testing?

Please ask me again next year! This is an active area of development for us.

Our working hypothesis is that there isn’t a one-size-fits-all approach when it comes to ML testing or monitoring. Instead, we want to provide easy building blocks and patterns for data scientists which they can use to tailor a testing approach that works for their use case.

Do things like data regulation (GDPR etc.) or requirements around gender or racial bias prevention commonly affect model pipelines in terms of testing or audit?

Some models are more affected by these issues and others less so, e.g. the streaming quality example I mentioned before.

Final few questions. What is the split between classical ML methods vs. DL methods in production today?

Our data scientists have the freedom to choose the modelling approach that makes the most sense for their domain. For instance, in NLP and computer vision, DL methods are often clear winners. In many other domains classical ML methods still have many upsides.

Sounds sound, so to speak. How do you envision the future of Netflix’s ML infrastructure? What is the ideal end goal?

I don’t think that this effort ever “ends” per se. Whenever we hit a big goal, people get excited and empowered and their appetite grows to do even more, and we get to define a new, even more ambitious goal.

The big vision we have for ML infrastructure is that it should take a minimal amount of time to prototype a solid ML-based solution to any business problem, test it in real life without friction, and if it works, push it to production with the click of a button.

Interestingly, it is possible to build infrastructure like this for many specific sets of business problems already today. The real challenge is to make this work for any problem. This requires a symbiotic relationship between humans who have the domain knowledge and machines that take care of the heavy lifting.

As some final words of wisdom for our readers: What’s your top tip for companies looking to build their own ML infrastructure?

Be cognizant of the technology maturity curve when it comes to ML infrastructure. Many areas of the ML ecosystem are still quite new and immature today, but many parts of it will get commoditized over the next 5-10 years. Innovations of today can easily become the tech debt of tomorrow.

This is one of the reasons why we are betting on the human-centric approach. The underlying platforms and techniques will evolve but we don’t believe that AI will replace data scientists and other domain experts in the foreseeable future.
